The saturation control of pretty much all image processing software is an unfortunate misnomer, to say the least. It actually controls either the chroma in Ych-like spaces (computed from CIE Yxy 1931, Yuv or YCbCr spaces), or some remote idea of saturation as used by HSL spaces, which are essentially a polar rewriting of RGB coordinates (usually expressed in sRGB space).

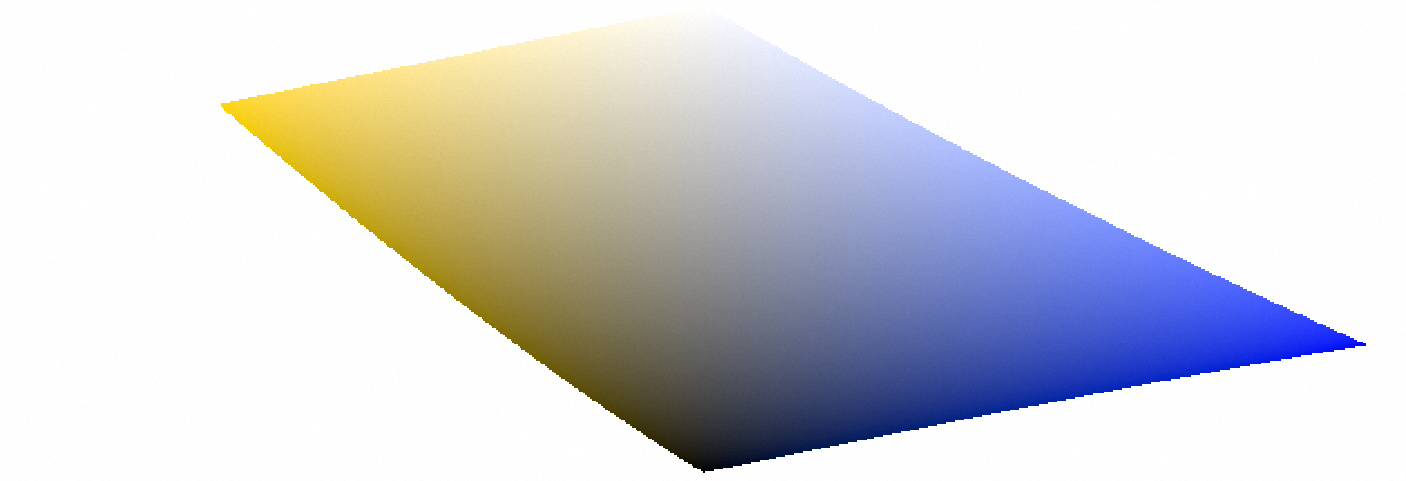

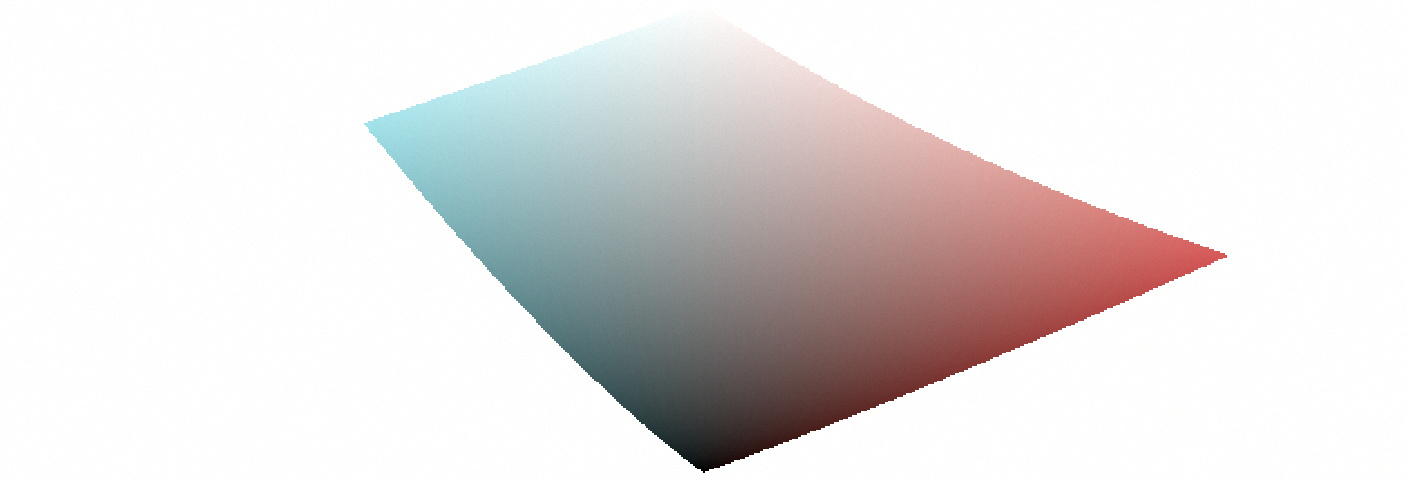

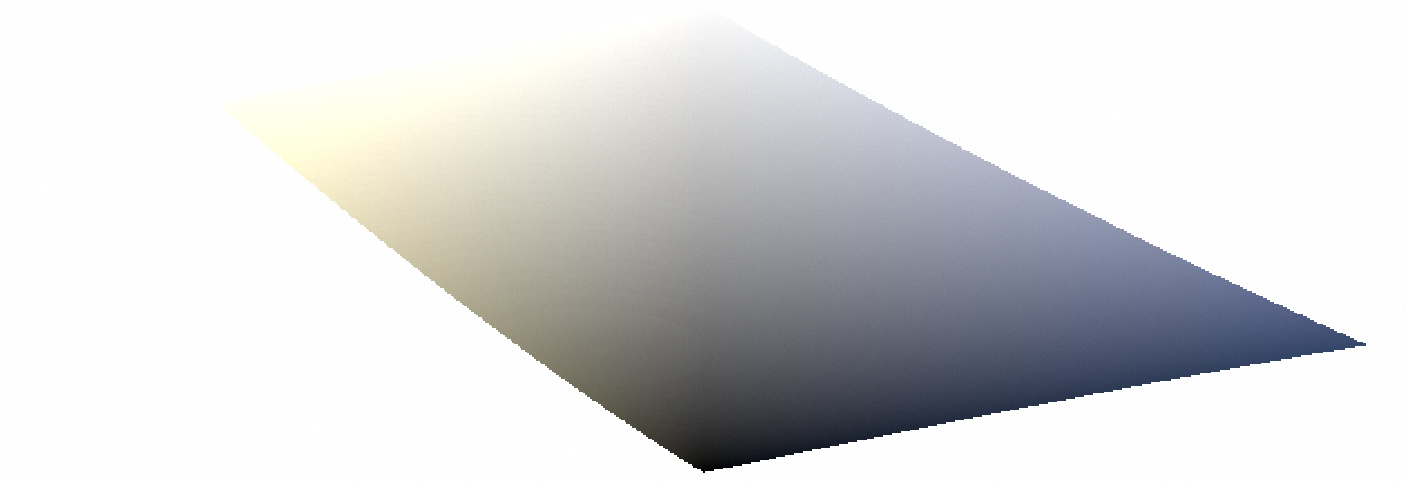

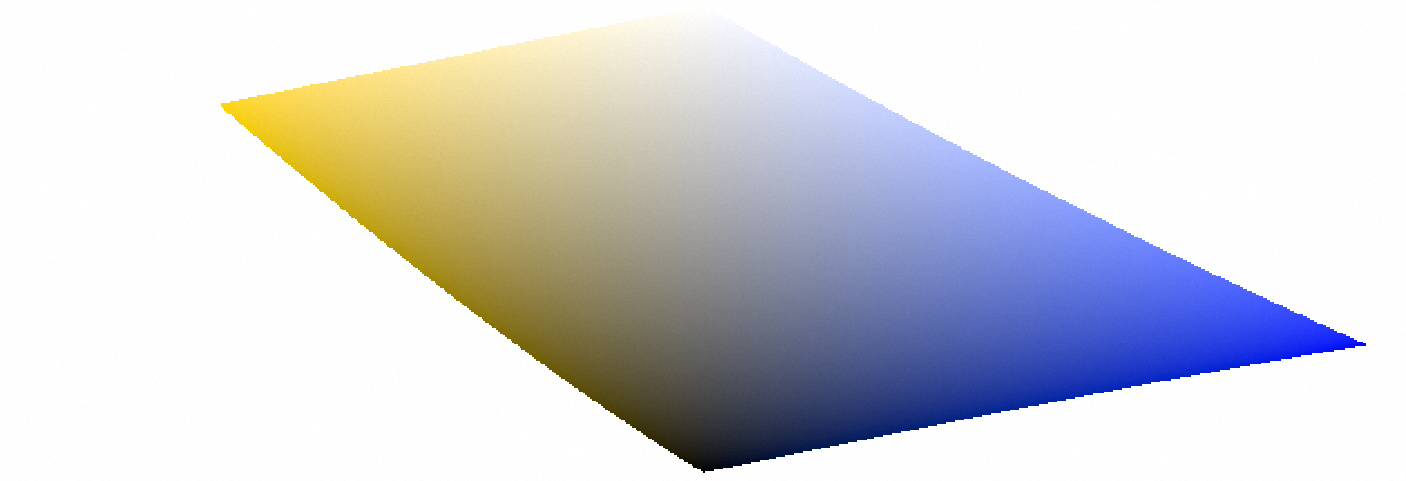

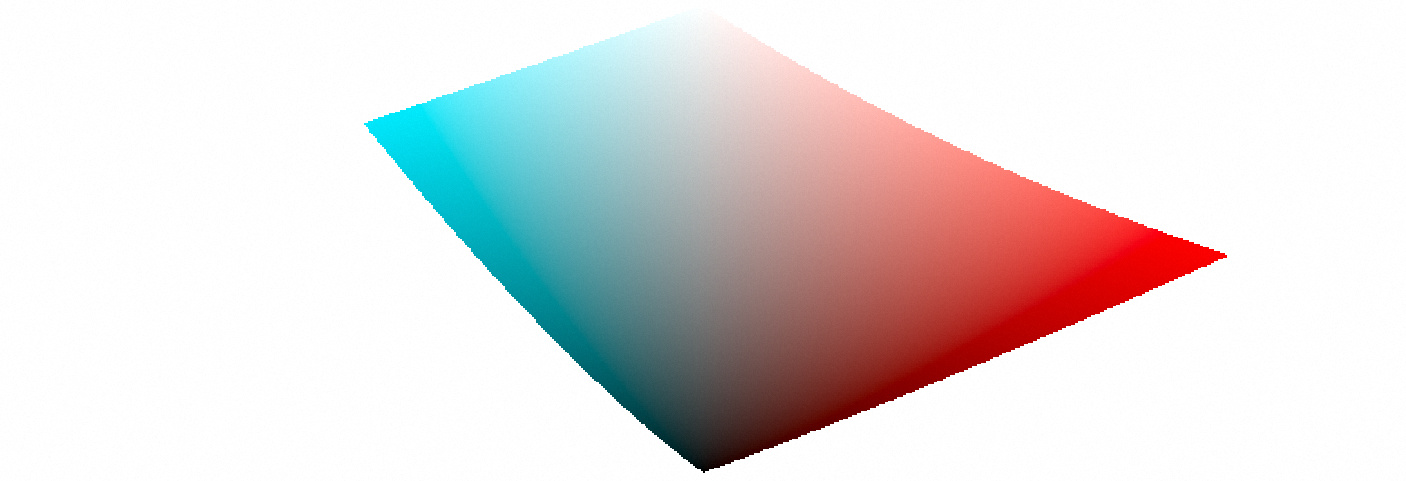

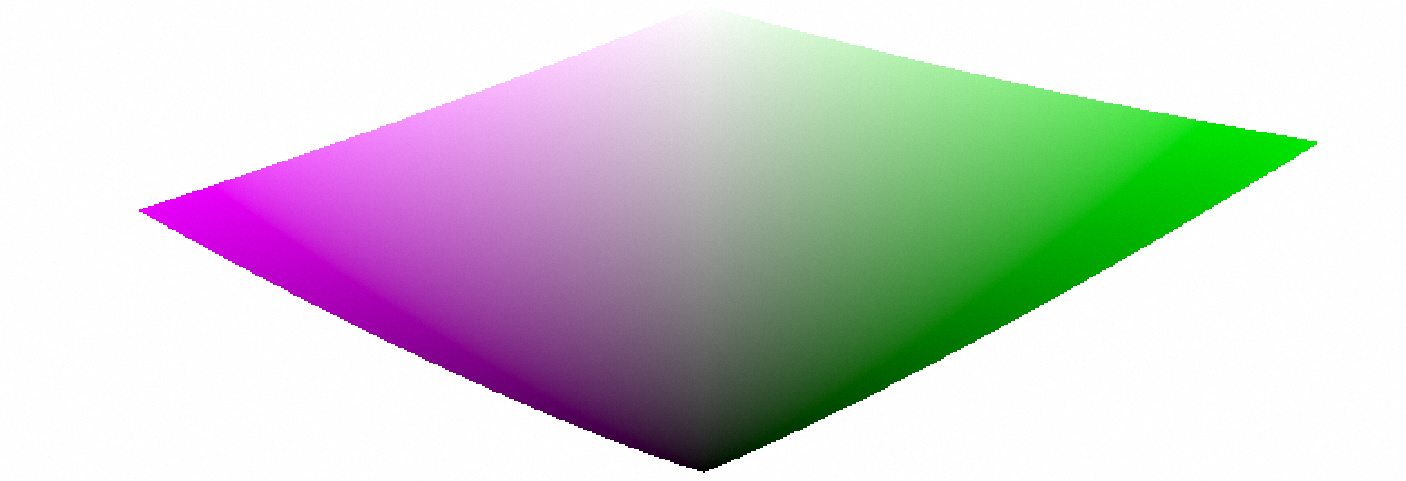

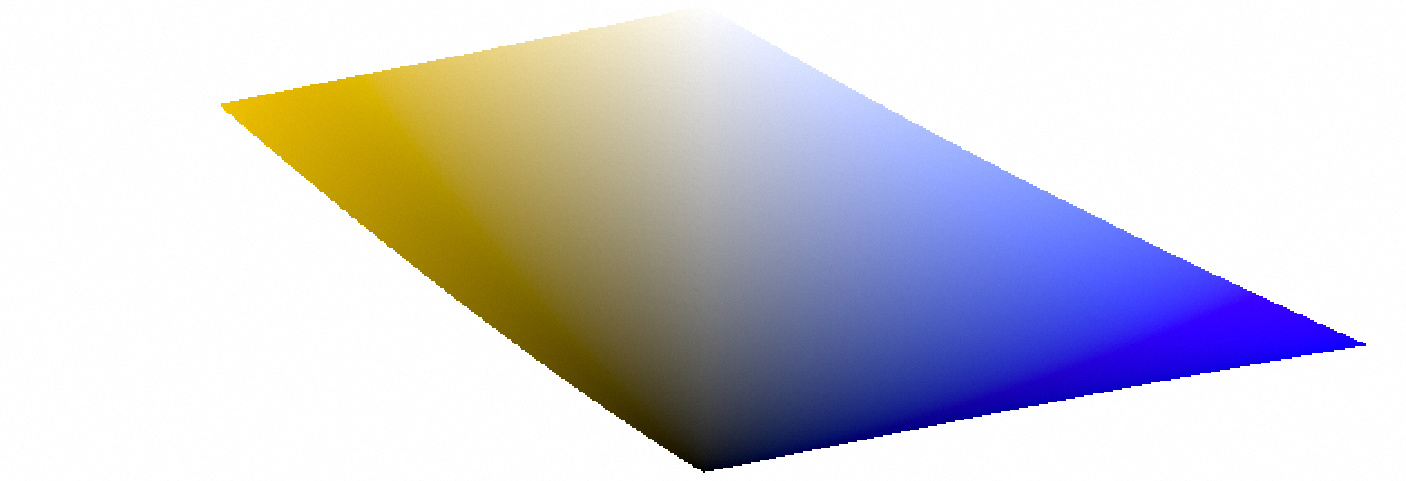

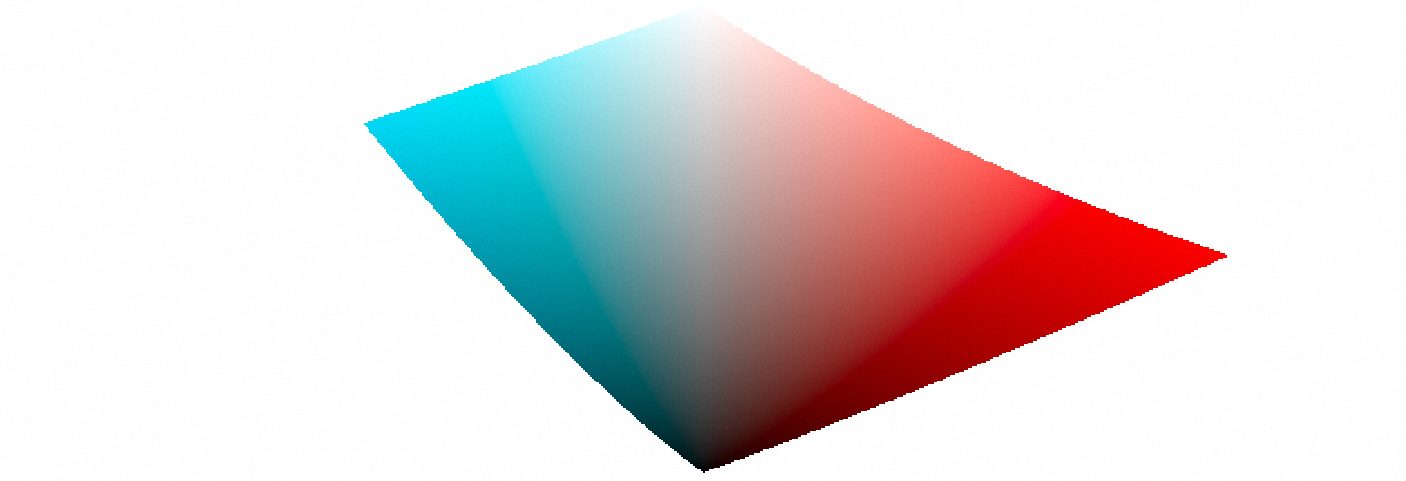

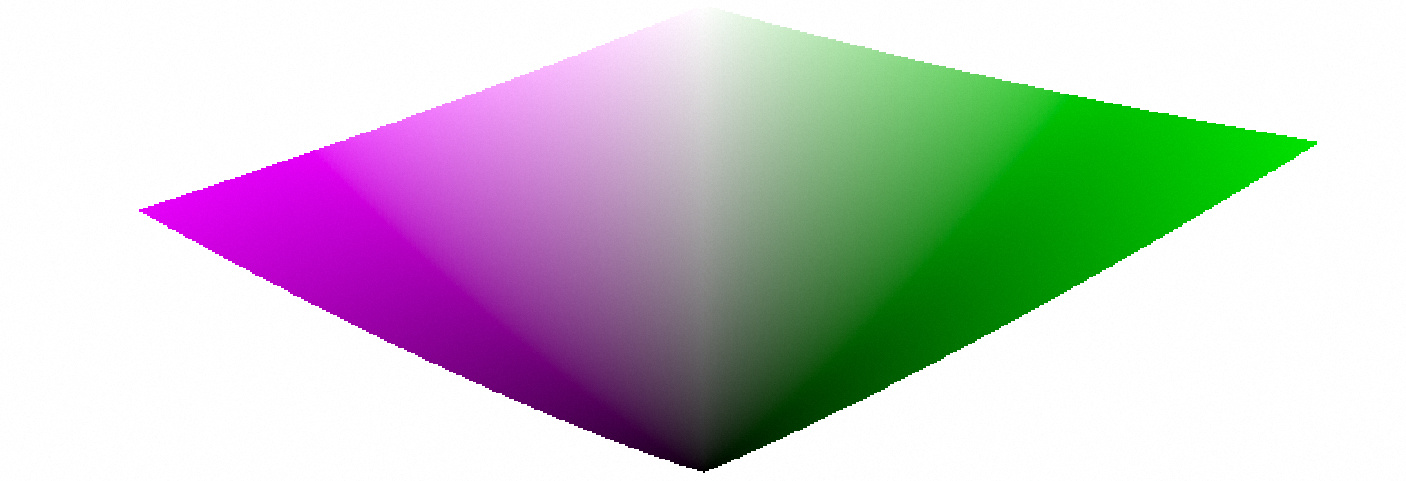

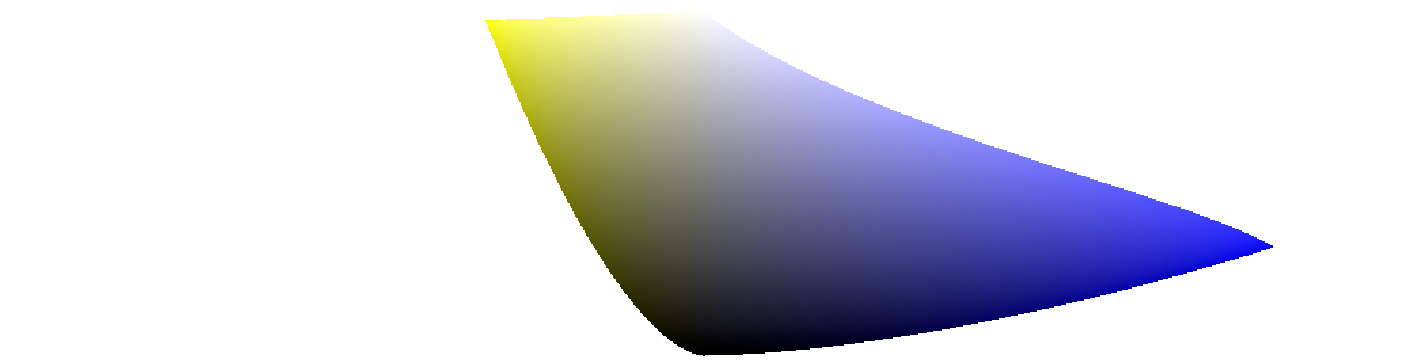

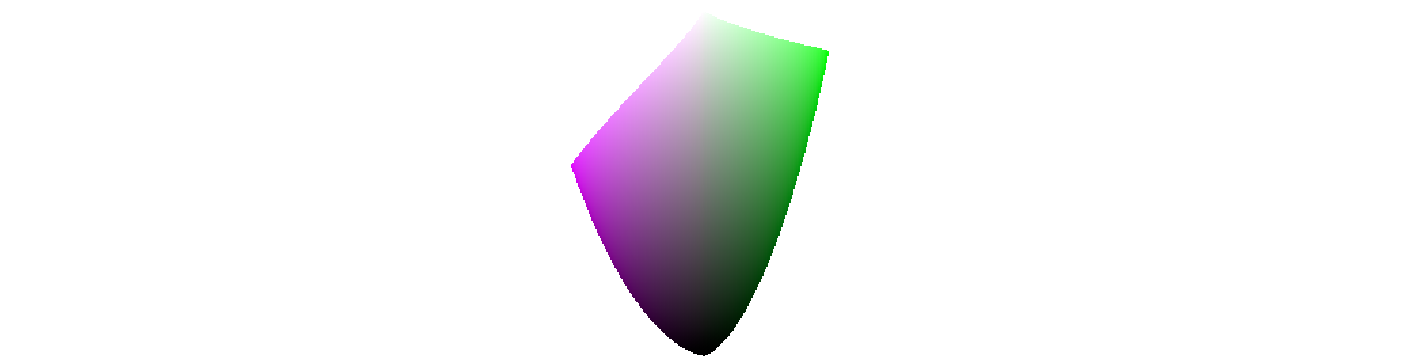

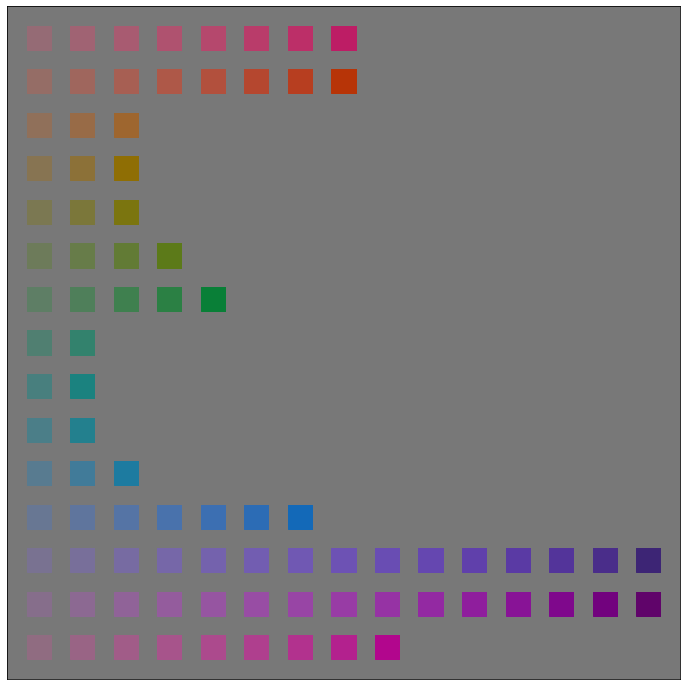

The “saturation” as defined by the HSL space has been proven times and times again to hold no perceptual meaning and finds its origin into the first GUI of limited computers doing integer arithmetic. We can show here another illustration as to why HSL/HSV are meaningless to computer graphics. The following gradients where generated at constant CIE 1931 luminance $Y = 0.4075$ and at constant chroma. For each color model, the chroma has been empirically adjusted to the maximum value that produces a gradient fully contained within sRGB gamut (without clipping). For the HSL/HSV models, since luminance and chroma are not defined, S and L/V have been roughly adjusted visually to match the perceptual gradients.

It is immediately noticeable that HSL and HSV do not degrade at constant lightness, nor provide constant chroma or “saturation”. Also, the green range spans too wide on the gradient, compared to other hues, while yellow only has a thin line, and cyan is also compressed. The perceptual hue gradients display no yellow, because yellow is only found at higher luminances and should not appear here. HSL/HSV should display olive-green and light brown in place of yellow, that is, “dark yellow”.

The model parameters used are :

- HSL : $S = 0.5$, $V = 0.7$,

- HSV : $S = 0.4$, $L = 0.9$,

- CIE Lab 19761 : $L = 70 / 100$, $c = 38 / 100$,

- IPT 20132 : $I = 0.676$, $c = 0.22$,

- JzAzBz 20173 : $Jz = 0.01022$, $c = 0.48$,

- Oklab 2020-20214 : $I = 0.7438$, $c = 0.12$

- darktable UCS 22 (developed below) : $L = 0.71$, $c = 0.36$

- Yrg 20195 : $Y = 0.4311$, $c = 0.085$.

It is worth noting that Kirk Yrg 2019 is a scene-linear (radiometrically-scaled) space designed for color-grading. The Ych/Yrg rewriting is meant to have an uniform hue repartition around the white point, but has been designed for the sole purpose of having well-scaled GUI controls directly translatable into RGB parameters for algorithms running in scene-referred RGB. It uses the CIE 2006 definition of Y, such that $Y_{CIE \, 1931} = 0.9453 Y_{CIE \, 2006}$.5 It is therefore a kind of Yuv space, while CIE Lab, JzAzBz, IPT and Oklab are perceptually-uniform (non-radiometrically-scaled) spaces.

#What is saturation anyway ?

Saturation is a word that has been abused by decades of bad computer graphics, mixing its scientific meaning with its common usage. It is useful here to reboot the CIE definitions:

CHROMA: colourfulness of an area judged as a proportion of the brightness of a similarly illuminated area that appears grey, white or highly transmitting.6

SATURATION: colourfulness of an area judged in proportion to its brightness.7

BRIGHTNESS : attribute of a visual perception according to which an area appears to emit, transmit or reflect, more or less light. 8

COLORFULNESS : attribute of a visual perception according to which the perceived colour of an area appears to be more or less chromatic. 9

So, if we untangle this we get that:

- chroma is relative to the grey colour having the same brightness,

- saturation is relative to its own brightness.

This yields two different representations of colour :

- a Lightness, Chroma, Hue system,

- a Brightness, Saturation, Hue system.

An useful, graphical, translation between both systems can be found on David Briggs’s website10, we reproduce it here :

Translated into computer graphics terms, the lightness is a perceptual rescaling of the radiometric luminance (CIE Y component). For this scaling, Oklab uses a simple cubic root, CIE Lab 1976 uses a cubic root with a noise threshold, and other models may use the Dolby Perceptual Quantizer (JzAzBz) or a Michaelis-Menten analog, but the principle of a simple cubic root holds : it raises mid-tones and connects smoothly with medium black and white which it leaves unchanged. The lightness axis goes through the grey gradient. The chroma is orthogonal to the lightness. All colours share the same lightness axis.

Saturation, however, is not an unique axis. Each colour has its equi-saturation axis going through it from the black point. Conversely, the equi-brightness axis is also a propriety of each color, oriented in a parallel direction to the white → primary color.

An important consequence is that desaturating should turn red into pink then into white. This is consistent to what happens when diluting pigments into white paint, or mixing a colored light with white light, if we forget the Abney effect.

This is not what computer graphics “saturation” algorithms do. Below are the sRGB gamut slices at constant hue, for the hues of the Rec709 primaries, from my previous post, the sRGB book of color (top line). See what happens to them when we reduce their chroma by 50% in Kirk Yrg (middle line) and then by 75% (bottom line) :

This effectively degrades colours into a monochrome image where the grey shade is defined by the original luminance of the pixel, because we use an Yuv kind of space. Of course, “desaturation” based on HSL, HSV, or RGB averaging will have another effect, but it should be fairly close visually.

Problem is, outside of computer graphics, the result of a desaturation is expected to be a paler colour. That is less colourful and lighter. Effectively, this falls back to turning saturated colours into their pastel equivalents, and pastel colours into their primary equivalent. This cannot be done with the typical chroma-based “desaturation”, but needs a real saturation change, as defined by the CIE.

As a side-note, film emulsions can perfectly degrade saturated colors to light, pastel colors by a bleaching effect. It is about time that the digital world catches up on film to suppress this insane film nostalgia based on an alleged intrinsic property of film while the truth is film sensitometry was carefully crafted by people who conducted extensive colour research before.

#Choosing a colour space

Chroma is a perceptual dimension of colour, but it can still be dealt with either in radiometrically-scaled spaces (Yuv, Ych) or in perceptually-uniform spaces (Lab, Lch). However, brightness is linked to both lightness and chroma, and for proper scaling of the effect along both directions, a perceptually uniform color space is the obvious choice. However, we need to stop and discuss what perceptually uniform means, because it is casted like a magic spell by too many software developers.

Colour spaces like CIE Lab 1976, IPT, JzAzBz etc. aim at having euclidean distances uniformly spaced. Euclidean distances measure the delta E, that is the perceptual difference between 2 colors, in order to control, predict and possibly reduce the colour dissimilarity between a reference and a test image or a reference and a test colour. Such spaces are not designed to modify colour but to provide a metrology framework in which a simple 3D euclidean distance has a cognitive meaning.

Colour spaces like CIECAM 02 and 16, Hunt and Nayatani, aim at being colour adaptation models, meaning they describe the appearance of colours with respect to the viewing conditions (illuminant colour, surround lighting, etc.) and provide models to adapt the colours, that is to retain the same appearance while the viewing conditions change. But here, Hunt and CIECAM models where designed for image reproduction (that is, reflective surfaces) while Nayatani was designed for lighting engineering (that is, emissive objects).

Not many models are aimed at allowing colour manipulation in a perceptually-defined way. Such models exhibit an even hue repartition over the chromaticity diagram, as well as straight equi-hue lines (unlike Abney lines) and circular equi-chroma. But when we take into account the limitations of colour adaptation models (bounded white luminance, viewing conditions, etc.), the choice gets scarce.

There are several perceptually uniform colour spaces that explicitly include the support of saturation and brightness based on observer data :

- the Hunt model, built between 1952 and 1995 by a Kodak researcher, Robert William Gainer Hunt,

- the Nayatani et al. model, built 1981 and 1995,

- the CIECAM02 and its second version, CIECAM16.

But they have several problems :

- the Hunt model is not invertible, so while we would be able to compute the saturation and let the user change it, we would be unable to bring back the result into the RGB realm,

- The Nayatani model is complicated, using many transcendent functions sequentially (log and power),

- CIECAM16 has a numerically unstable behaviour, with a crooked gamut shape,

- All of them rely on bounded luminance, unsuitable for HDR signals.

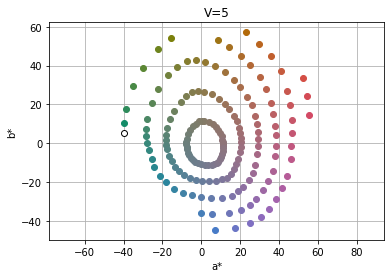

The colour space review coming with the designing of Oklab4 is useful to visualize the fitness of colour manipulation by looking at the shape of the visible gamut and the repartition of Munsell hues (graphs taken from Ottosson):

The spaces exhibiting a circular repartition of chroma are the best suited for this work, that is Oklab and CIECAM16. Indeed, if we are to digitally rotate hues around white, the rotated colours will not end up at the same perceived chroma as the original colours in spaces that have ellipsoidal chroma rings. Conversely, applying digitally a chroma gain may not hit all the hues with same visual intensity if the chroma rings are not circular.

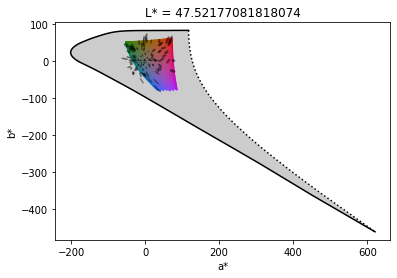

However, a look at the gamut shows that CIE CAM 16 has a highly non-convex boundary that will cause problems when pushing colors :

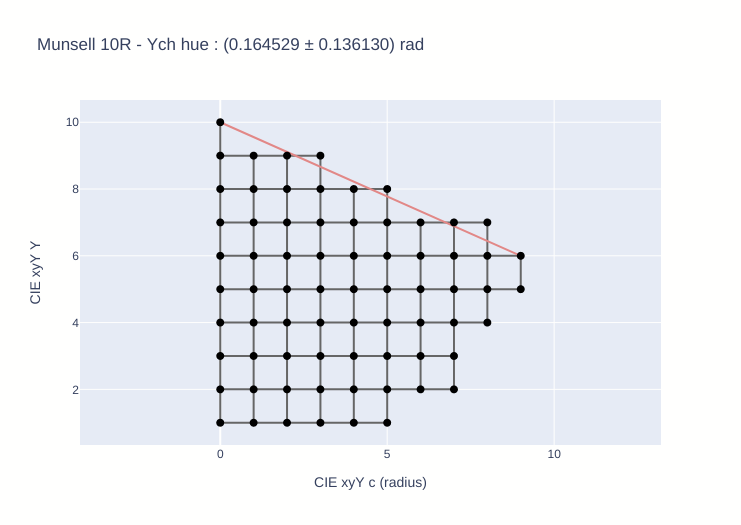

This means we will use Oklab as a starting point. It is almost as good as CIE CAM16 as far as Munsell hues go, but more simple. But since it is a Lightness/Chroma space, we will need to rewrite it as a Brightness/Saturation space and we need to assert how the constant hue planes behave. For this, we project Munsell colours on the $(L, c)$ plane in Oklab and look for the geometry of the predictions :

We plot the lines of equal lightness and equal chroma in Munsell notation system. The hues are given in the title in Munsell notation as well as in Oklab hue angle, as (average ± standard deviation) radians in $[0; 2 \pi]$. Since angles cannot be averaged directly (because $0 \equiv 2 \pi$), the Lch hue angles are converted to complex unit vectors, and the vector coordinates are averaged in the complex plane. The average angle is taken as the argument of the average complex vector, and the standard deviation is directly on the complex coordinates.

If Oklab was perfect, the mesh formed by those lines would be a perfect square grid, with orthogonal lines oriented in the same direction as the main axes. We can see that Oklab does a fairly good job at predicting Munsell values and chromas, however, the yellow-green region is really crooked in the extreme chromas. Also, the yellow region has equi-lightness lines slightly dropped, while the opposite region, purple, is has the equi-lightness lines slightly raised.

What is a bit concerning, though, is the density of the mesh is not uniform across hues. The 10PB plate (purple-blue) hat 34 Munsell chroma units spanning over 0.35 Oklab chroma units, while the 10B plate (blue) has 18 Munsell chroma units spanning over 0.32 Oklab chroma units.

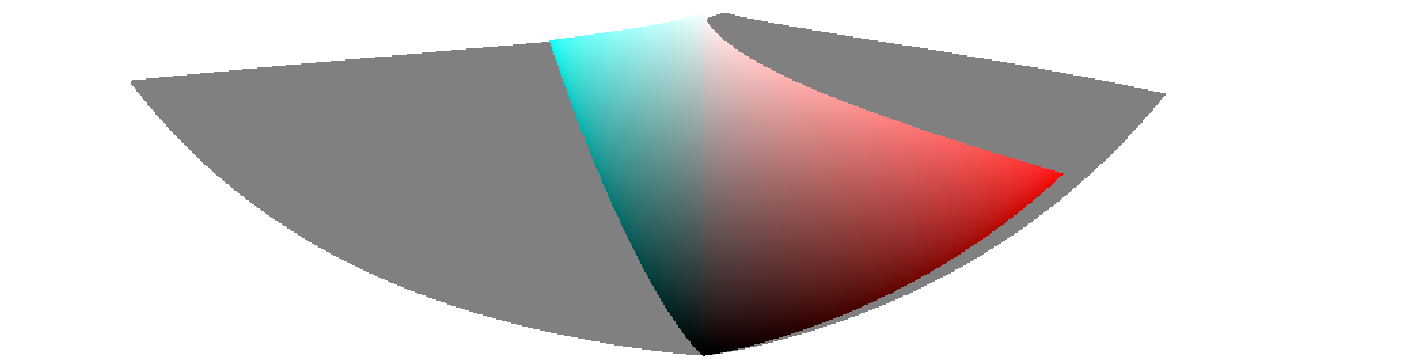

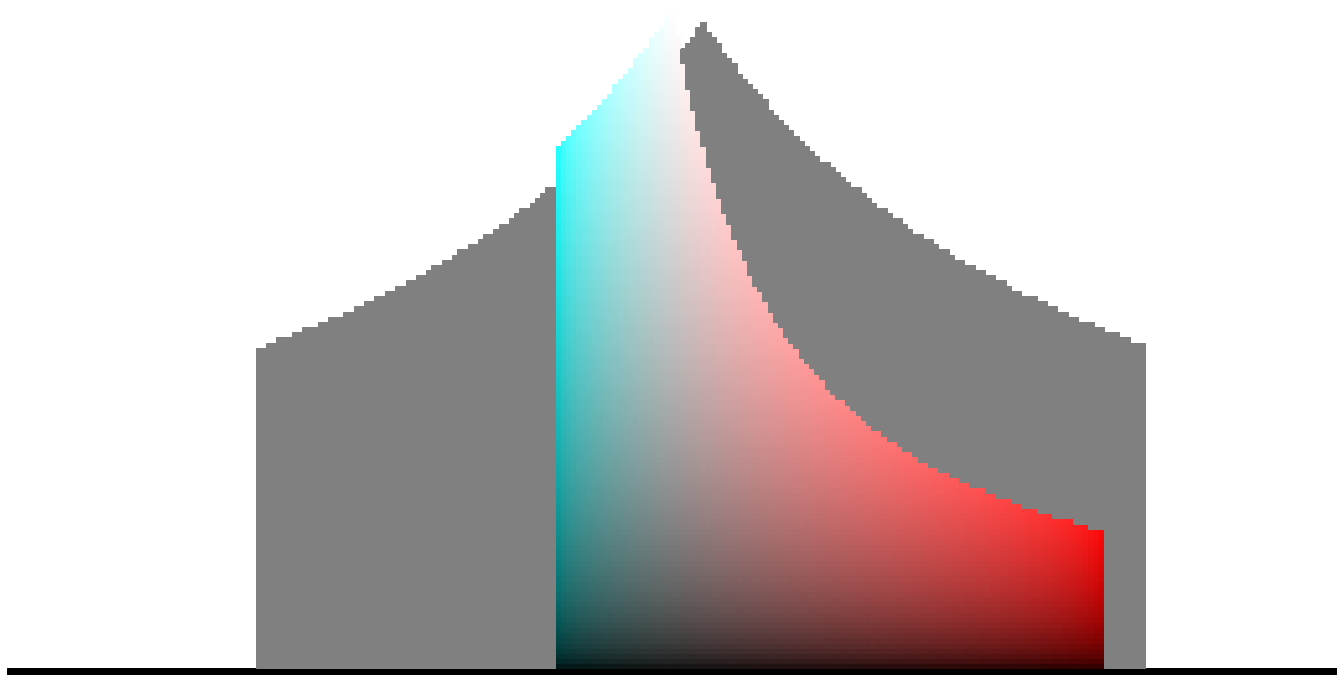

#Rewriting Oklab as Brightness-Saturation system

Oklab has the desirable property of leaving the gamut of RGB spaces shaped as a straight cone, while the hue slices of sRGB in JzAzBz showed above clearly demonstrate non-linearity at the boundary of the gamut:

The non-continuity of the blue primary slice is slightly concerning, we will have to assess later if it is a problem. Nevertheless, we can build a look-up table (LUT) of the gamut boundary as a simple linear hue-wise function, in the $(L, c)$ plane.

#Building the gamut LUT

The lower bound of the gamut is a straight line having constant saturation, that is a constant $s = \dfrac{c}{L}$ ratio. We create an RGB cube of 92×92×92 elements between $0$ and $1$, convert RGB coordinates to Oklab (L, a, b) then to Lch. The LUT is 2×1D and will store the $\arg \max(c)$ and $\arg \max(c / L)$ for each hue. A hue resolution of 1° is enough for the LUT. Then we apply anti-aliasing using a 5-taps 1D box blur.

The maximum chroma and saturation will allow us to compute the slope of the gamut boundary and the cusp for each hue. This is a reasonably fast operation to compute on-the-fly, and LUTs can be pre-computed for usual colour spaces.

#Parametrisation of the transform

Let $A$ denote the original colour in the $(L, c)$ plane of constant hue. $A$ has a saturation defined either as a ratio $s = \dfrac{c}{L}$ or as an angle $\gamma_1 = \arctan(s)$.

The user will be asked to define the saturation change by a proportional factor applied on the angle $\gamma_1$. This will define a rotation of the vector $\vec{S}$ around the black point to give the vector $\vec{S’}$, such that $||\vec{S’}|| = ||\vec{S}|| = \sqrt{L^2 + c^2}$ and $\arg(\vec{S’}) = k_1 \arg(\vec{S}) = k_1 \gamma_1 = \kappa \in [\alpha_1; \frac{\pi}{2}]$.

The fact that we know the limit of the gamut boundary and that we apply a transform which nicely falls back to a rotation of this boundary means that we can have built-in gamut sanitization, and saturation clipping yields a graceful gamut clipping that preserves gradients of lightness and chroma at constant hue.

Once the vector $\vec{S}$ has been rotated by an angle $\kappa - \gamma_1$, we need to rescale it to reach the line of equi-brightness. This reaches the new colour $A’’$ ported by the vector $\vec{S’’}$. The user will then be asked to define a brightness change that will proportionally scale the modulus of $\vec{S’’}$.

The equi-brightness lines are all parallel to the white → gamut cusp line, forming an angle $\delta$ with the horizontal. From the maximum saturation and chroma, we can compute the $(L, c)$ coordinates of the cusp :

$$ \begin{align} c_{cusp} &= \arg,\max(c)_h\\ L_{cusp} &= \dfrac{c_{cusp}}{\arg \max(s)_h}\\ \end{align} $$

Oklab can be scaled4. This means that, in HDR settings, when the white point exceeds 100 Cd/m², it suffices to normalize the XYZ coordinates by the Y of the white, then to convert to Oklab. White can thus be expected at 100%, which makes it easy to compute the slope of the equi-brightness line:

$$ \delta = \arctan\left(\dfrac{1 - L_{cusp}}{c_{cusp}}\right) $$

We now get a triangle $A A’’ O$ where $O$ denotes the origin of the axes and the black point. By the property of internal alternate angles between 2 parallel lines, $\gamma_1 = \gamma_2$ and $\widehat{A’’ A O} = \gamma_1 + \delta$.

Since the $A A’’ O$ triangle is scalene, we will have to resort to the law of sines :

$$ \dfrac{\sin(\delta + \gamma_1)}{||\vec{S’’}||} = \dfrac{\sin(\kappa - \gamma_1)}{||\vec{AA’’}||} = \dfrac{\sin(\pi - (\delta + \gamma_1) - (\kappa -\gamma_1))}{||\vec{S}||} $$

From which we deduce:

$$ ||\vec{S’’}|| = ||\vec{S}|| \dfrac{\sin(\delta + \gamma_1)}{\sin(\pi - \delta - \kappa)} $$

On this vector, the brightness scaling can be applied from the user parameter : $||\vec{S’’’}|| = k_2 × ||\vec{S’’}||$.

Converting back to Lch is easy:

$$ \begin{align} \kappa &= k_1 × \gamma_1 \in [\arg \max(s)_h; \pi / 2]\\ L &= ||\vec{S’’’}|| \sin(\kappa)\\ c &= ||\vec{S’’’}|| \cos(\kappa)\\ \end{align} $$

where the angle $\kappa$ is clipped to fit in the gamut by $[\arg \max(s)_h; \pi / 2]$.

#Results

#Synthetic

The experiments are done in darktable, using unbounded RGB on the 8 bits PNG files from the post sRGB book of color. The working gamut is Rec2020 and so the gamut boundary as well as the equi-brightness lines are computed against it. The upper gamut cone is not sanitized. The file is then encoded back to sRGB.

The bottom cone holds well even for insane saturation increases that are guaranteed to exceed the sRGB gamut. On the other hand, the upper cone clips at non-constant hue for heavy desaturation, which may be explained by the fact that the sRGB gamut has a curved upper cone while the desaturation moves in a rectilinear direction. This should be handled when converting back to bounded RGB.

One issue we observe here is how yellow degrade to a muddy variant as saturation rises, while we would expect it to degrade to something close to cadmium yellow, in the spirit. It may be consistent with the oddities displayed in the mesh of Munsell colours for the 10Y (yellow) plate, where the extreme chroma values are not only compressed, compared to the greyer shades, but also the lines of equi-lightness are slanted.

#Natural

The next result comes from a picture made myself in studio, under high-CRI D55 lighting with a profiled camera. The starting material is the already-edited JPEG. We use the color balance RGB module from darktable to shift saturation in Oklab and apply the same settings on chroma in Kirk Yrg :

The saturation approach allows effectively to bleach the highlights to get the beloved desaturation coming with most film emulsions, while increasing the density of colours in shadows. The result reinforces both the colour and the lightness contrast. This effect is customarily obtained by applying a channel-wise sigmoidal contrast-increasing curve in RGB, with two major drawbacks : the hue is not preserved through a channel-wise non-linear operator, and the (de)saturation is a by-product of the contrast increase and not an orthogonal, individual parameter. As the amount of desaturation cannot be separated from the amount of contrast increase, and the resulting desaturation cannot be correlated to any perceptual color property, the result is unpredictable and that makes for a very bad, random, workflow.

The chroma approach, working at constant luminance, makes the shadows degrade to fluorescent colours and yields a less natural result.

#Choosing the gamut

I want to be very clear on the fact that all we have done so far is to change the direction in which the saturation/desaturation pushes colours. This is geometric hocus-pocus and has nothing to do with perception, it does not take into account the greyness boundary (linked to Evans & Swenholt’s notion of fluorence11) nor the Helmholtz-Kohlrausch effect. Yet it already yields more pleasing results than the pure chroma approach and meets the pastel design goal, which is already a success for such a simple trigonometric trick.

The gamut against which we computed the equi-brightness direction has been set to darktable’s default working RGB space : Rec2020 (linear). Using Rec709 makes the effect stronger and the gamut clip at lower saturation values. In any case, it would be interesting to evaluate if we can use directions independent from the RGB working space, but linked to human vision. That is, fitting the $\delta(hue)$ angle to some perceptual reality and make it independent from the RGB spaces heuristics.

#Building a new perceptual color space for color correction

After trying to fix Oklab for a dozen of hours, it appeared that the numerical issues it raises are grounded into design constraints we don’t need for the current task. And so do most of the other perceptual spaces.

First of all, Oklab is a retro-fit of CIE CAM16 using a simpler model, using CIE CAM16 predictions as a training set, and trying to approximate them by a model retaining the algorithmic simplicity of CIE Lab. It will therefore inherit the flaws from both approaches.

These perceptual spaces are designed to either compute a delta E or to adapt the appearance of an image as the viewing conditions change, that is retain the perception and translate it into a different viewing context. For this, they rely on an opponent color space : a/b or P/T depending on models. To go this opponent space, they convert to cone response space (LMS), then apply a non-linear transform in 3D. Because this non-linear transform relies on an additive light model and usually does not tolerate negative input (CIE Lab as Oklab use a cubic root), the cone response space has to be fitted with two unsolvable constraints :

- being hue-linear,

- yielding positive cone intensities for all visible colors.

The numerical trade-offs this creates are very damaging for both the physiological accuracy of the model and the numerical accuracy of the fitting to experimental datasets.

Also, this 3D mapping is tilting the perceptual lightness plane, as we saw above for Oklab. In any case, none of them accounts for brightness including the compensation for Helmholtz-Kohlrausch effect.

If we review the need for an opponent space for our application here, we see that it is merely used to compute the chroma (as a metric of the distance to achromatic) as $c = \sqrt{a^2 + b^2}$ and the hue as $h = \arctan(b / a)$. While we need an uniform delta E over the perceptual space, we don’t need to compute or express it. Fortunately, the Munsell dataset provides us directly with hues and chromas, and does not bother with opponents colour dimensions because artists don’t ever need them.

We want a proper scaling of perceptual correlates like brightness and saturation for the colour change effect to be evenly scaled over the visible range.

So we could fit an Lch model directly from Munsell hue-value-chroma dataset, without going through LMS space and without even tilting the lightness plane. Doing so, we will not try to model the physiology of vision, but approach the problem as a geometric space distortion where the Munsell hues and the saturations (as a ratio of chromas / values) can be predicted by a 1D mapping from the hues, chromas and lightnesses of forming the principal dimensions of the model.

This model will need to be invertible. We will try to fit brightness data to derivate correlates of perceptual brightness accounting for the Helmholtz-Kohlrausch effect. Using the brightness and saturation correlates, we will rewrite our image saturation algorithm in terms of perceptually-even operators.

#Fitting Munsell Value with lightness

#Lightness model

Various models have been proposed over the years to fit the lightness correlate from Y luminance, and many different equations can be fitted with very good accuracy on the data because the range is quite small. I propose a generalized Michaelis-Menten equation, as used in IPT and CIE CAM97, 02 and 16 do, because of its link with cone response.12

It is worth mentioning, though, that the Y luminance is not directly a cone response and CIE CAM 97 to 16 use an achromatic $A$ stimulus here, derivated from a linear combination of the physiological cone response. In other words, there is more to lightness than just CIE Y luminance, and we choose here to ignore that fact for the sake of hue-linearity and to avoid going through an intermediate LMS space.

We form the a-priori model:

$$L^* = V_{Munsell} = a \dfrac{Y^c}{b^c + Y^c}$$

The choice of $a$ is critical for the invertibility properties as it defines the upper lightness bound (saturation level) of the model. Indeed, the inverse model yields:

$$Y = \left(\dfrac{b^c L^*}{a - L^*}\right)^{1 / c}$$

where it becomes obvious that the lightness model will be valid only for lightness levels verifying $a - L^* > 0$. The saturation level is to be chosen carefully as it is the practical bound of the model. We know that the static dynamic range of the human retina cone cells is expected around 6 EV12. However, the adaptation to luminosity is a local and temporal scheme13 where the $b^c$ half-saturation parameter is adapted dynamically through time and space, such that the resulting dynamic range can be quadrupled. This is more likely the result of pupil dilatation, that acts like a physiological exposure bracketing and it will be very difficult to include in a colour appearance model, so I need to discard it.

CIE CAM1614 uses the cone adaptation equation :

$$f(x) = sign(x)\frac{400(F_L |x| / 100)^{0.42}}{27.13 + (F_L|x| / 100)^{0.42}} + 0.1$$

From which we can deduce that $b^c / a = 27.13 / 400$ and $b / a = 27.13^{1 / 0.42} / 400 = 6.47$. I will therefore constrain the $b/a$ ratio to 6.5 while refitting the equation for $(a, c)$. This yields :

$$L^* = V_{Munsell} = 2.64385176 \dfrac{Y^{0.57145904}}{1.67207394022 + Y^{0.57145904}}\label{L-eqn}$$

The reverse model yields :

$$Y = \left(1.67207394022 \dfrac{L^*}{2.64385176 - L^*}\right)^\frac{1}{0.57145904}$$

Which clearly shows that it is defined for $L^* < 2.645$. In practice, a lightness $L^*$ of 2.64 maps to a relative luminance $Y_r = 145852$, that is 145852 times brighter than perfect diffuse white or 12 EV above, so the lightness bound should not be an issue for practical use.

#Comparison with CIE CAM16

A paper yet to be published15, by Hellwig and Fairchild, aims at simplifying the CIE CAM16 correlates by reviewing their interdependency and assumptions. The simplified achromatic response becomes :14

$$A = 2 R_a’ + G_a’ + 0.05 B_a’ - 0.305$$

$$f(x) = sign(x)\frac{400(F_L |x| / 100)^{0.42}}{27.13 + (F_L|x| / 100)^{0.42}} + 0.1$$

It is noted that the function, however, is unstable near zero.14 It also never reaches zero, which would be a problem for color-grading applications, since the black end of the working RGB spaces gamut could be assigned a non-zero saturation, resulting most likely in out-of-gamut code values.

$R’$, $G’$, $B’$ are the linear cone responses in Hunt-Pointer-Estevez cone space obtained from CIE XYZ 1931 coordinates by a matrix dot product :

$$ \begin{bmatrix} R’ \\ G’ \\ B’ \\ \end{bmatrix} = \begin{bmatrix} 0.38971 & 0.68898 & -0.07868 \\ -0.22981 & 1.18340 & 0.04641 \\ 0.00000 & 0.00000 & 1.00000 \\ \end{bmatrix} \begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix} $$

$X_c$, $Y_c$ and $Z_c$ are the adapted CIE XYZ 1931 coordinates for the CIE E illuminant, which is defined as the equi-energy illuminant ($X = Y = Z$ or $x = y = 1/3$ in CIE xyY 1931 space).

The darktable UCS is already non-linearily adapted but only on the CIE Y 1931 component. We can here compare both transfer functions, with the CIE CAM16 cone adaptation function normalized by 140 to reach 1 in 1 :

$R_a’$, $G_a’$ and $B_a’$ are the non-linear cone responses, adapted from the $R’$, $G’$, $B’$ using the generalized Michaelis-Menten function:14

$$f(x) = sign(x)\frac{400(F_L |x| / 100)^{0.42}}{27.13 + (F_L |x| / 100)^{0.42}} + 0.1$$

It is noted that the function, however, is unstable near zero.14 It also never reaches zero, which would be a problem for color-grading applications, since the black end of the working RGB spaces gamut could be assigned a non-zero saturation, resulting most likely in out-of-gamut code values.

$R’$, $G’$, $B’$ are the linear cone responses in Hunt-Pointer-Estevez cone space obtained from CIE XYZ 1931 coordinates by a matrix dot product :

$$ \begin{bmatrix} R’ \\ G’ \\ B’ \end{bmatrix} = \begin{bmatrix} 0.38971 & 0.68898 & -0.07868 \\ -0.22981 & 1.18340 & 0.04641 \\ 0.00000 & 0.00000 & 1.00000 \end{bmatrix} \begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix} $$

$X_c$, $Y_c$ and $Z_c$ are the adapted CIE XYZ 1931 coordinates for the CIE E illuminant, which is defined as the equi-energy illuminant ($X = Y = Z$ or $x = y = 1/3$ in CIE xyY 1931 space).

The darktable UCS is already non-linearily adapted but only on the CIE Y 1931 component. We can here compare both transfer functions, with the CIE CAM16 cone adaptation function normalized by 140 to reach roughly 1 in 1 :

To see how the darktable UCS L correlates with CIE CAM16 A achromatic response, we create a synthetic LUT of $(X, Y, Z)$ triplets in CIE XYZ 1931 2° under CIE E illuminant and project them into both spaces :

The well-known negatives created by the Hunt-Pointer-Estevez cone transform matrix appear clearly here. They make absolutely no sense from a physiological point of view: positive light stimuli are not expected to create negative perceptions. Apart from the scaling difference (I have not found the reason why CIE CAM 97 to 16 map perfect diffuse white to 400…) the curves are fairly correlated for positive values, with a larger error in low lights. Since it is difficult to infer a valid achromatic response where CIE CAM16 A yields negatives, no further attempt will be made to fit more closely the dt UCS L* on CIE CAM 16 achromatic response, but it would be numerically feasible, since the R² is 0.84.

#Fitting chromaticity

#Data

We use Munsell Renotation data from 194316, taken from the datasets of the Python computational library Colour Science 0.3.16 . We adapt them to the CIE E illuminant, equi-energy ($X = Y = Z$) through the CIE CAT16 transform, because it will prove to be the less challengin to fit. It is also the method used in CIE CAM1614.

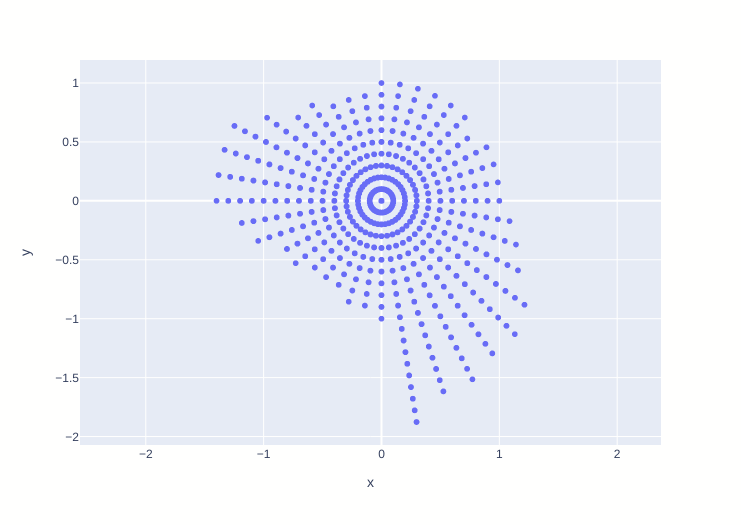

Projecting it onto the CIE xyY 1931 chromaticity plane shows the whole problem : there is no immediate correlation between perceptual properties of colour (hue and chroma) and their radiometric coordinates in normalized cone space. That is to say that colour is not merely a product of our retina.

The initial colour correlates are quite bad :

When projecting onto the orthogonal axis, the issue is essentially the same :

The chroma and the luminance spacing are not properly scaled compared to each other, and the perceptual chroma is exaggerated at lower luminances, compared to the same chroma at higher luminance.

#Objective

A perceptual space means that each of the 40 Munsell hue should have an angle of $\frac{2\pi}{40}$ with the next and each chroma should occupy a circle centred around white and separated by a radius from the next circle :

Similarly, the orthogonal plane should display a perfect rectangular mesh for the equi-chroma and equi-lightness lines :

Our goal will be to warp the chromaticity plane to get the test data spaced like our objective, using only invertible transforms. The Munsell colors are given virtual coordinates in our virtual target space, as Yuv components.

#Chromaticity model

We will re-use the formalism of MacAdam moments17 as extended by Pridmore18 for saturation and brightness. These very little-known papers (66 and 16 citations respectively in Google Scholar as of early 2022) start with a quite elegant way to predict the luminance gain necessary to obtain an achromatic colour when mixing two complementary chromaticities, and more generally predicting the result of mixing any number of chromaticities in an additive setup.

The moment of a boundary chromaticity $\lambda$ (belonging to the visible locus boundary) of cordinates $(x, y)$, relative to the achromatic locus (white reference) $(x_n, y_n)$, in CIE xyY 1931 space can be defined as:

$$m_\lambda = \frac{\sqrt{(x - x_n)^2 + (y - y_n)^2}}{y}$$

Similarly, for the complementary boundary chromaticity $\lambda_c$ of coordinates $(x_c, y_c)$ (complementary in the sense that $(x, y)$ and $(x_c, y_c)$ are colinear with the neutral achromatic locus):

$$m_{\lambda c} = \frac{\sqrt{(x_c - x_n)^2 + (y_c - y_n)^2}}{y_c}$$

Then, MacAdam predicts that the ratio between the luminances $L$ and $L_c$ necessary for $\lambda$ and $\lambda_c$ to mix into an achromatic color is :

$$b = \frac{L_c}{L} = \frac{m_\lambda}{m_{\lambda c}}$$

That is to say that the complementary chromaticity $\lambda_c$ has to be gained at a luminance of $b\cdot L$ for the color mix to yield:

$$ \begin{bmatrix} L \\ x \\ y \end{bmatrix} + \begin{bmatrix} bL \\ x_c \\ y_c \end{bmatrix} = \begin{bmatrix} L + bL \\ x_n \\ y_n \end{bmatrix} $$

Pridmore18 refines this one step further by identifying that the relative saturation is the reciprocal of the relative lightness between two complementary boundary colors :

$$b = \dfrac{m_\lambda}{m_{\lambda c}} = \dfrac{L_c}{L} = \dfrac{S}{S_c}$$

From which he derivates the colour attributes brightness and saturation for boundary colours:

$$ \begin{align} B &= L × b \\ S_{B, CIE} &= m × b^2 \end{align} $$

So I will create an ($u, v$) space where the distance to achromatic is equal to the moment $m = \frac{\sqrt{(x - x_n)^2 + (y - y_n)^2}}{y}$ and then linearly map it in 2D in order to better fit Munsell hues and chromas. Notice that the moments method works the same in any CIE radiometric space, and that any linear map preserves the euclidean distance unchanged, which is ground to improve the Munsell fitting some more, in moments space.

#Metrics

Since our ultimate goal is to manipulate saturation and because we don’t want to map the lightness axis, we will be fitting the saturation correlate $\frac{C}{L}$ against the Munsell saturation $\frac{C / 20}{V / 10}$. The Munsell values and chroma normalization factors are meant to ensure C and V are normalized between $[0;1]$. We will minimize the quadratic error over the relative chromaticities $\frac{u}{L}$ and $\frac{v}{L}$ to account for the 3rd orthogonal dimension in the 2D mapping problem and to make a 1D correlation appear between Munsell saturation and our model’s ratios of $\frac{c}{L}$.

#Optimization

The first stage is a global optimization for chromaticity coordinates $(u, v)$. We interleave linear 2D matrix products and the non-linear moment normalization, using a non-linear least-squares solver with a cost function $z = \sqrt{(u_{model} - u_{Munsell})^2 + (v_{model} - v_{Munsell})^2} / L$. The method used is the Trust Region Reflective19 as implemented in the Python numerical library Scipy .

We first convert from CIE XYZ 1931 2° adapted for illuminant CIE D65, then to xyY. The mapping from $Y$ to $L$ is done using the equation $\ref{L-eqn}$. Then, the mapping from xy to uv chromaticities is done using :

$$ \begin{bmatrix} u \\ v \end{bmatrix} = M_1 \cdot \begin{bmatrix} x - x_{D65} \\ y - y_{D65} \end{bmatrix} \\ $$

$$ M_1 = \begin{bmatrix} 2.0265488 & -1.23424972 \\ 0.51328342 & 1.36974324 \end{bmatrix} $$

Following this, we can rewrite the radial distance to achromatic as the moment. Unfortunately, using the MacAdam moment (relative to the $x$ axis), that is dividing the radial distance to achromatic by the $y$ coordinate, distorts the chromaticity in a way that cannot be perceptually fitted for uniform delta E, sending the blue region far away from the rest of similar chromas.

So we compute the moment compared to the $u$ axis, that is using the $v$ coordinate as a divider. But since the $v$ coordinates are remaped in $[-0.5, 0.52]$, we need to find an offset of $v$ against which evaluating the moments to reduce the delta E error. The best fit is found for $v = -0.85937617$ :

$$ \begin{align} \theta &= \arctan\left(\frac{v}{u}\right)\\ u’ &= \frac{\sqrt{u^2 + v^2}}{v + 0.85937617} \cos(\theta)\\ v’ &= \frac{\sqrt{u^2 + v^2}}{v + 0.85937617} \sin(\theta) \end{align} $$

This is shown only for completeness, but since $\cos(\theta) = \frac{u}{\sqrt{u^2 + v^2}}$, we can spare 3 evaluations of transcendent functions and simplify:

$$ \begin{align} u’ &= \frac{u}{v + 0.85937617}\\ v’ &= \frac{v}{v + 0.85937617} \end{align} $$

We then apply a second matrix product to go to UV space :

$$ \begin{bmatrix} U \\ V \end{bmatrix} = M_2 \cdot \begin{bmatrix} u’ \\ v’\end{bmatrix} $$

$$ M_2= \begin{bmatrix} 0.95322837 & -0.15730312 \\ 0.05496097 & 1.04744158 \end{bmatrix} $$

The correlates look good enough:

[gallery columns=“1” link=“file” size=“full” ids=“1417,1418,1420,1419,1421”]

Since we only do 2D vector algebra, the 3 steps above can be simplified into a single rational function that will evaluate faster. The following result is for CIE D65 only (as is the UCS space) :

$$ \begin{align} D &= 0.51328342 \cdot x + 1.36974324 \cdot y + 0.248226918606 \\ U &= \dfrac{1.85102272593919 \cdot x - 1.39198673401947 \cdot y - 0.120851170908779}{D} \\ V &= \dfrac{0.64901548423294 \cdot x + 1.36689046166649 \cdot y - 0.652654103807916}{D} \end{align} $$

The reverse model will be less nice. The first option is to simply use invert the precedent equations :

$$ \begin{align} D &= 0.0545736844628483 \cdot U - 0.946511393183473 \cdot V + 1.0 \\ x &= \frac{0.359179775014519 \cdot U + 0.0524217359106796 \cdot V + 0.3127}{D} \\ y &= \frac{-0.144485240055261 \cdot U + 0.15188423099269 \cdot V + 0.329}{D} \end{align} $$

The second option is to undo the slow track step by step. The first step is to multiply $[U, V]$ by $M_2^{-1}$ :

$$ \begin{bmatrix} u’ \\ v’ \end{bmatrix} = M_2^{-1} \cdot \begin{bmatrix} U \\ V \end{bmatrix} $$ $$ M_2^{-1} = \begin{bmatrix} 1.04006072 & 0.15619467 \\ -0.05457368 & 0.94651139 \end{bmatrix} $$

Then, the reverse moment has 2 solutions because we have to solve a rational polynomial of the second order, which means we have 2 roots and we need to choose the one in the proper quadrant. A key property to solve the reverse model is the fact that moments preserve the angle around achromatic, such that $\arctan2(v, u) = \arctan2(v’, u’)$ therefore $\frac{v}{u} = \frac{v’}{u’}$. Unfortunately, $\frac{v’}{u’} = \frac{-v’}{-u’}$ so we have to check $S_1$ and $S_2$ and choose the one that has the same sign as the non-zero coordinate of the input $(u’, v’)$ :

$$ \begin{cases} S_1 &= \begin{bmatrix} u_1 \\ v_1 \end{bmatrix} = -0.85937617 \cdot \begin{bmatrix} u’ / (v’ - 1) \\ v’ / (v’ - 1) \end{bmatrix} \\ S_2 &= \begin{bmatrix} u_2 \\ v_2 \end{bmatrix} = -0.85937617 \cdot \begin{bmatrix} u’ / (v’ + 1) \\ v’ / (v’ + 1) \end{bmatrix} \end{cases} $$

Finally, multiply by the inverse matrix $M_1^{-1}$ :

$$ \begin{bmatrix} x - x_{D65} \\ y - y_{D65} \end{bmatrix} = M_1^{-1} \cdot \begin{bmatrix} u \\ v \end{bmatrix} $$ $$ M_1^{-1} = \begin{bmatrix} 0.40175829 & 0.36201679 \\ -0.15055075 & 0.5944054 \end{bmatrix} $$

#HDR support

To understand how HDR may affect our model, we need to review the current imaging pipeline design and perform a thought experiment.

In the scene-referred workflow that arose from the needs of alpha compositing (occlusion modelling) and dynamic-range-agnostic workflow, the general goal is to process the radiometrically-scaled image (scene-linear). Indeed, applying image transforms that break the radiometric relation also introduce artifacts in later convolutional filters (blurs) and alpha compositing (opacity/transparency of stacked layers). Therefore, it is desirable to keep the image data radiometrically-scaled for as long as possible along the editing pipeline.

However, as of 2022, most “graphical art” editing displays are still SDR, with a contrast ratio of maximum 1000:1 and a white intensity of maximum 350 Cd/m². Also, photography is still very much grounded in the paper world, where contrast ratios are much lower. So, there is a need for a non-linear view transform that maps possibly HDR imaging pipelines to SDR display. A such transform has been detailed in the 2018 article Filmic, darktable and the quest of the HDR tonemapping. This view transform is to be applied as late as possible in the pipeline.

But this means that the data been manipulated in the image processing pipeline are not directly the data being rendered on the screen. So we need operators affecting pipeline colours in a way that is predictable in terms of results as seen on the screen. Namely, the pipeline white luminance may be 2 to 4 times higher than the display white luminance. This will affect the perceptual attributes of colour, because both lightness and chroma (as per the CIE definition of chroma, which is different from the Munsell chroma, called colorfulness in the CIE referential). This separation between the HDR model, from the inner pipeline, and the SDR view, from the pipeline output, is still one of the most challenging concepts to grasp for image software developers and users alike.

How does an HDR pipeline relate to Munsell colours upon which we have fitted the darktable UCS 22 ?

We could imagine a first Munsell sweep (like a page of the Munsell book of colours), made of reflective material, lit by some light source. If we had a perfect diffuser attached to the sweep (100 % white), it would have a luminance of 100 Cd/m². The implicit part of the Munsell values is they are all defined by comparison with the value of the pure white. Since this value is normalized to unit, the operation is silent and does not appear.

Now, we put another Munsell sweep in the same visual field, but lit with a light source 4 times as bright as the first one (+2 EV). This will shift the reference of white in our visual field and raise it by 2 EV, so all the colours from the first sweep will be redefined in our perception by comparison with the new white reference. Noticeably, the lightness (Munsell grey patches) scale of the first sweep gets compressed non-linearly, and so do its chroma. In other words, the comparison to pure white is not silently normalized by 1 as far as the first sweep is concerned.

In darktable’s pipeline, we work in a scene-referred workflow where users are expected to normalize linearly the RGB code values such that the reflective middle-grey (20 %) is anchored at 0.20 and the perfect reflective white (100 %) at 1. Values above 1 are the realm of specular highlights, emissive surfaces or reflective elements lit by a secondary light. When the whole image is made of reflective materials lit at different intensities, the diffuse white reference is the one relative to the lighting of the region of interest, which will be the subject of the picture in most cases. For example, for a picture of a subject in a backlit situation, we consider the reflective range $[0;1]$ relatively to the bounce light lighting the side of the subject exposed to the photographer’s lens.

All this means that the perceptual colour attributes will need to be adapted for the scene SDR white. Adaptation involves defining a reference to which adapt the test samples. As the vast majority of screens are still working in SDR and as our UCS is fitted on Munsell reflective colours, we will normalize the colour attributes to the realm of Munsell colours : the reflective range $[0; 1]$ where 1 is assumed to be the relative luminance of the perfect white diffuser.

This means that we will have to backport some of the adapting properties of CIE CAM 16 and translate them.

#Colour attributes

We assume an average viewing background, meaning a 20 % middle grey compared to the perfect diffuser luminance. This will allow us to simplify the CIE CAM 16 colour attributes and is the recommended background in darktable as of v3.0 and above, and also meets the ISO standard for pre-press (REFERENCE).

#Lightness-Chroma model

The lightness-chroma model is the easiest to derivate from Munsell colours. We will introduce the adapting values by analogy with CIE CAM16.

#Lightness

The simplified CIE CAM1615 defines the lightness $J$ as follow:

$$J = \left(\frac{A}{A_{white}}\right)^{cz}$$

where $A$ is the achromatic response, and $cz$ accounts for the Stevens effect. Then, $z = 1 + \sqrt{n}$ and $c = 0.69$ for an average viewing surround.

$n$ is defined as the ratio of luminances between the background and the accepted white in the conditions of viewing, such that $n = \frac{L_{background}}{L_{white}}$. For normal conditions, $L_{background} = 0.2$ (middle-grey referred to diffuse white) and $L_{white} = 1$ (diffuse white), which yields $n = 0.2$, $z = 1.4472$, and finally $cz \approx 1$. So it appears that $c$ is adjusted for $cz$ to be a no-operation in normal conditions.

While the conditions of viewing can be assumed to be average if the image editor follows ISO recommendations for photography and pre-press worflows, the white level is the one from the possibly HDR scene and will need to be accounted for. I have shown that the $L^*$ coordinate of the darktable UCS correlates with CIE CAM16 $A$, so we will backport this formula from CIE CAM16:

The lightness $J$ in darktable UCS will be defined as follow :

$$J = \left(\frac{L^*}{L^*_{white}}\right)^{cz}$$

where $c$ and $z$ have the same meaning as in CIE CAM16. $L_{background}$ can be assumed to be 20 % all the time, since even HDR images tend to have an average luminance close to middle-grey. $L_{white}$ needs to be radiometric luminance of the scene-referred white of the picture. $L^*_{white}$ is the non-linearily adapted correlate of Munsell value corresponding to the white point of the picture, and $L^*$ is the pixel-wise correlate of Munsell value. Recall that $L^*$ metrics correlated to Munsell value are adjusted such that diffuse white (usually anchored between 80 and 120 Cd/m²) is normalized to 1, so for a purely reflective scene lit evenly, $J = L^*$ directly.

#Colorfulness

The colorfulness as defined by the CIE is an absolute metric, which corresponds to the radial distance to achromatic. We can compute it directly :

$$ M = \sqrt{U^2 + V^2}\label{eqn-colorfulness}$$

#Chroma

The chroma has been largely misunderstood among programmers as the same thing as colorfulness, but the CIE defines it as relative to white. Many models like CIE Lab or CIE Luv and so on are bounded by diffuse white and since diffuse white is normalized to 1 or 100 %, chroma becomes to all intents and purposes colorfulness. But the concept remains : chroma is colorfulness divided by the achromatic response of white, and the fact that this response being normalized to 1 makes the division pointless doesn’t change it.

In darktable UCS, the Munsell chroma is only weakly correlated to colorfulness ($R^2 = 0.73$). Before carrying on, I propose to improve the 1D correlate by fitting $C = f(M, L)$. The proprosed chroma correlate is :

$$ \begin{cases} C &= 0 &\text{ if } L = 0 \\ C &= \frac{2.78288575869163}{L^*_{white}} \cdot \left(L^* \left(\dfrac{M}{L^*}\right)^{0.648780286421352}\right)^{1.73754096076991} &\text{else} \end{cases}\label{eqn-chroma} $$

#Objective evaluation

#Plots

I plot below the Munsell colours into darktable UCS $(L^*, C)$ planes of constant hue :

This shows some interesting properties of the colour space :

| Munsell hue | Oklab hue deviation | dt UCS hue deviation | Oklab saturation deviation | dt UCS saturation deviation |

|---|---|---|---|---|

| 10 RP | 0.116 | 0.128 | 0.179 | 0.029 |

| 2.5 R | 0.109 | 0.115 | 0.162 | 0.025 |

| 5 R | 0.109 | 0.115 | 0.164 | 0.030 |

| 7.5 R | 0.113 | 0.122 | 0.163 | 0.031 |

| 10 R | 0.128 | 0.135 | 0.163 | 0.030 |

| 2.5 YR | 0.111 | 0.115 | 0.130 | 0.021 |

| 5 YR | 0.081 | 0.090 | 0.100 | 0.019 |

| 7.5 YR | 0.074 | 0.074 | 0.091 | 0.017 |

| 10 YR | 0.062 | 0.071 | 0.087 | 0.019 |

| 2.5 Y | 0.045 | 0.060 | 0.082 | 0.018 |

| 5 Y | 0.044 | 0.050 | 0.078 | 0.018 |

| 7.5 Y | 0.029 | 0.027 | 0.077 | 0.017 |

| 10 Y | 0.020 | 0.013 | 0.076 | 0.016 |

| 2.5 GY | 0.026 | 0.024 | 0.075 | 0.014 |

| 5 GY | 0.047 | 0.057 | 0.084 | 0.013 |

| 7.5 GY | 0.047 | 0.063 | 0.089 | 0.015 |

| 10 GY | 0.051 | 0.071 | 0.107 | 0.024 |

| 2.5 G | 0.063 | 0.068 | 0.136 | 0.029 |

| 5 G | 0.054 | 0.057 | 0.140 | 0.020 |

| 7.5 G | 0.048 | 0.051 | 0.141 | 0.015 |

| 10 G | 0.043 | 0.054 | 0.141 | 0.011 |

| 2.5 BG | 0.050 | 0.076 | 0.136 | 0.011 |

| 5 BG | 0.058 | 0.104 | 0.121 | 0.010 |

| 7.5 BG | 0.054 | 0.110 | 0.116 | 0.017 |

| 10 BG | 0.060 | 0.126 | 0.112 | 0.030 |

| 2.5 B | 0.058 | 0.126 | 0.113 | 0.043 |

| 5 B | 0.055 | 0.125 | 0.118 | 0.057 |

| 7.5 B | 0.072 | 0.120 | 0.135 | 0.067 |

| 10 B | 0.101 | 0.097 | 0.138 | 0.071 |

| 2.5 PB | 0.118 | 0.070 | 0.158 | 0.076 |

| 5 PB | 0.148 | 0.052 | 0.186 | 0.082 |

| 7.5 PB | 0.107 | 0.096 | 0.564 | 0.197 |

| 10 PB | 0.089 | 0.063 | 0.422 | 0.120 |

| 2.5 P | 0.077 | 0.045 | 0.356 | 0.100 |

| 5 P | 0.060 | 0.033 | 0.300 | 0.080 |

| 7.5 P | 0.020 | 0.040 | 0.256 | 0.070 |

| 10 P | 0.045 | 0.073 | 0.226 | 0.060 |

| 2.5 RP | 0.069 | 0.099 | 0.210 | 0.052 |

| 5 RP | 0.097 | 0.124 | 0.196 | 0.040 |

| 7.5 RP | 0.105 | 0.124 | 0.179 | 0.029 |

| TOTAL | 0.4948 | 0.5590 | 1.20 | 0.35 |

Lower errors diverging by more than 5% of relative magnitude are emphasized.

The darktable UCS space shows a globally superior hue-linearity error, except in the purple-blue region. It is still very close (within ±5%) from Oklab in the red, yellow and green region. I didn’t try hard to fit for hue-lineary and Abney effect since no dataset of constant hue loci seems to agree with the others, except on the fact that yellow (570 nm, roughly Munsell 10 Y) is a straight line in CIE xyY :

As it turns out, the Munsell 10Y is also the minimum of hue average deviation for both models.

Where the darktable UCS actually shines is in its correlation to Munsell saturation, taken as the RMSE between $\frac{\text{Munsell chroma} / 20}{\text{Munsell value} / 10}$ and $\frac{\text{model chroma}}{\text{model lightness}}$. This models the scaling of the chroma axis relatively to the lightness axis, and therefore the 2D uniformity of the delta E. It is of critical importance since we are planning to travel $(L, c)$ plane in diagonal directions. The darktable UCS not only has a lower saturation error, but it is more evenly distributed along the visible locus. This can also be observed from the graphs of constant hue, where the mesh formed by lines of equal lightness and equal chroma appears more square in darktable UCS than in Oklab.

The overall better behavious (hue linearity and saturation error) of darktable UCS is notable in the blue-purple region, which is the typically pathological point of all perceptual colour spaces and the region where the worst out-of-gamut colours appear in display RGB. This makes it much more stable for future gamut-compression tasks.

#Predictions

The next thing to assess is how opponent additive colours are recorded in terms of hue. I have already shown in the sRGB book of colour that, in JzAzBz, the cyan complementary of sRGB/Rec709 red is not over a -180° hue angle fom the sRGB red. The property of opponent colours to produce achromatic light makes for a much more robust definition of colour opponency.

| Colour | sRGB coordinates | Luminance Y | Oklab hue | darktable UCS hue | darktable UCS colorfulness |

|---|---|---|---|---|---|

| Red | $(1, 0, 0)$ | 0.214 | 29° | 19° | 0.7659 |

| Yellow | $(1, 1, 0)$ | 0.927 | 110° | 101° | 0.3640 |

| Green | $(0, 1, 0)$ | 0.713 | 142° | 137° | 0.5977 |

| Cyan | $(0, 1, 1)$ | 0.786 | -165° | -160° | 0.2504 |

| Blue | $(0, 0, 1)$ | 0.073 | -95° | -79° | 1.2673 |

| Magenta | $(1, 0, 1)$ | 0.287 | -32° | -42° | 0.6752 |

So we can verify the hue angle of opponent colours :

| Mix | Oklab | darktable UCS | $Y_1 / Y_2$ | $M_2 / M_1$ |

|---|---|---|---|---|

| Red → Cyan | 29° - (-165°) = 194° | 19° - (-160°) = 179° | 0.272 | 0.327 |

| Yellow → Blue | 110° - (-95°) = 205° | 101° - (-79°) = 180° | 12.70 | 3.482 |

| Green → Magenta | 142° - (-32°) = 174° | 137° - (-42°) = 179° | 2.484 | 1.130 |

#Synthetic sweeps

Here are hue stripes produced at constant chroma and lighntess. Stripes are distributed at uniform lightness steps. The chroma is adjusted on each stripe to be as high as possible while remaining inside sRGB space.

The next gradients are hue × lightness at constant chroma. The colours that did not fit within sRGB gamut have been replaced by white.

Finally, the sRGB gamut slices at constant hue containing the primary Red/Cyan, Green/Magenta and Blue/Yellow

The last one, blue-yellow, clearly shows the Abney effect : from pure blue, the gradient takes a leap toward purple. This means that I will have to adjust hues some more after all.

#Hue

#Fitting hue

So far, we have considered the angle on the chromaticity plane $(U, V)$ to be the correlate of hue. We know that it is not completely perceptually accurate, but has the desirable property of keeping opponent colors set aside at 180°, which will be of use for the Brightness-Saturation model. Unfortunately, the sRGB blue gamut slice shows that this is not good enough to manipulate colors at constant hue.

All colour adaptation models deal with hue-linearity by tuning the LMS cone response. That is, each cone response is modeled in a 3D space from the radiometric emission (usually, going through CIE XYZ 1931), and then adapted through a non-linear transform. The product of this, as I showed above, has 2 properties :

- LMS cone space can and will output negative values for positive stimuli,

- complementary colours don’t have an angle of 180° anymore,

- the equi-lightness plane gets slanted.

However, linearizing hue perceptually will be where we pay the price of the previous choices : it will be heavily non-linear, and there is only so much we can do if we choose to ignore the retina’s physiology to work at constant lightness. Finally, the constraint of invertibility prevents us from using excentricity functions of hue like $e(h_{UCS}) = K \cdot \sum_{i=1}^{n} a_i \cos(i\cdot h_{UCS}) + b_i \sin(i \cdot h_{UCS})$, which is typically used to correct colorfulness or chroma by Hunt’s, CIE CAM16 and Nayatani’s models. Correcting hue as a function of itself would be the easiest way, but it is not a bijection and cannot be inverted.

First, we will need to remap the UCS with regard to chroma. Recall that the colorfulness $M$ is the “naive” radial distance to achromatic (equation $\eqref{eqn-colorfulness}$) in $L^*UV$ space. The chroma correlate $C$ is expressed by the equation $\eqref{eqn-chroma}$ as a function of $M$ and $L$. Then:

$$ \begin{align} \theta &= \arctan2(V, U)\\ \begin{bmatrix} U’ \\ V’ \end{bmatrix} &= \begin{bmatrix} C \cos(\theta) \ C \sin(\theta) \end{bmatrix} \end{align} $$

Then, we warp the $(U’, V’)$ space to center it on what appears to be the perceptual center of hues:

$$ \begin{bmatrix}U’’ \\ V’’\end{bmatrix} = M_3 \cdot \begin{bmatrix} U’ \\ V’ \end{bmatrix}\\ $$ $$ M_3 = \begin{bmatrix} 0.76426712090492599 & 0.20054726807320489 \\ -0.16136815864400481 & 0.55140064490679086 \end{bmatrix} \cdot $$

Then, we adapt non-linearily the $(U’’, V’’)$ space using independent Michaelis-Menten equation :

$$\begin{align} \alpha &= sign(U’’) \frac{0.74620661742486705 \cdot |U’’|^{1.1091628757564669}}{|U’’|^{1.1091628757564669} + 1.2697466043995993} \\ \beta &= sign(V’’) \frac{1.2008251904267178 \cdot |V’’|^{1.0640450648317035}}{|V’’|^{1.0640450648317035} + 1.429978004863145} \end{align}$$

Finally, we express the hue angle and its distance to the center of the space, which is not achromatic anymore, because of the $O$ offset:

$$ \begin{align} r &= \sqrt{\alpha^2 + \beta^2} \\ \gamma &= \arctan2(\beta, \alpha) \\ H &= 1.0373679054932574 \cdot \gamma + 0.32440889433879067 \end{align} $$

Notice that the radius $r$ is not a direct correlate of chroma anymore, because our space warping has essentially made Munsell chroma circles elliptic again, for the sake of hue-linearity. So $r$ is to be considered as an intermediate variable stored only to make the refined hue correlate invertible to original chroma and angle.

This radius $r$ can be easily fitted once again against Munsell chroma, with the following model:

$$ \begin{align} E(H) =& i + a \cos(H) + b \sin(H) + c\cos(2H) + d \sin(2H) \\ &+ e \cos(3H) + f \sin(3H) + g\cos(4H) + h \sin(4H) \nonumber\\ C’ =& 2.6966804 \cdot (E(H) \cdot r)^{1.26617339} \label{eqn-chroma-r} \end{align} $$

with:

- $a = 0.1168930$

- $b = 0.08057494$

- $c = 0.06451043$

- $d = 0.00929771$

- $e = -0.11172693$

- $f = -0.11981897$

- $g = 0.03453699$

- $h = 0.08214589$

- $i = 1.44146029$

The correlation is very good, with $R^2 = 0.97$ :

The improved accuracy is mostly due to the excentricity factor $e(H)$, because setting $e(H) = 1$ and fitting for the two remaining parameters yields an $R² = 0.929$, which is worse than $C$ at the previous step. This is actually consistent with the fact that Munsell chroma circles have been made elliptic in this hue-linear space

Going to the refined chroma estimate $C’$ to the radius $r$ is done with the following equation:

$$r = \left(\dfrac{C}{2.6966804}\right)^{1 / 1.26617339} \cdot \dfrac{1}{E(H)}$$

where $E(H)$ is the excentricity gain as defined above.

The reverse transform yields:

$$ \begin{align} \gamma &= \frac{H - 0.32440889433879067}{1.0373679054932574} \\ \alpha &= r \cos(\gamma)\\ \beta &= r \sin(\gamma)\\ U’’ &= sign(\alpha) \left(\frac{-1.2697466043995993 * |\alpha|}{|\alpha| - 0.74620661742486705}\right)^\frac{1}{1.1091628757564669} \\ V’’ &= sign(\beta) \left(\frac{-1.429978004863145 * |\beta|}{|\beta| - 1.2398430649704526}\right)^\frac{1}{1.0640450648317035} \\ \begin{bmatrix}U’ \\ V’\end{bmatrix} &= \begin{bmatrix} 1.2151295 & - 0.44194889 \\ 0.35560932 & 1.6842264 \end{bmatrix} \begin{bmatrix}U’’ \\ V’’\end{bmatrix} \\ \theta &= \arctan2(V’, U’) \\ C &= \sqrt{U’^2 + V’^2} \end{align} $$

It is worth noting that we now have a $(\theta, C)$ space of even chroma (circular Munsell chroma rings), defined at the previous step, where $\theta$ is an inaccurate correlate of hue, and a $(H, r)$ space of even hue (linear Munsell hue lines) where $r$ is an inaccurate correlate of chroma. Both spaces are half-specified in terms of perceptual correlates. Additionnaly, complementary colours are not set apart by an angle of 180° in this space, so it looses any additivity property for complementary colours. However, it gets much better perceptual evenness.

#Manipulating real colours at constant hue and chroma

If we are to manipulate both chroma at constant hue and hue at constant chroma from an original known colour, we need to interleave both models:

On the above principle schematic, the black coordinates refer to the $(\theta, C)$ space and the grey coordinates refer to the $(H, r)$ space. $W_C$ is the origin of the $(\theta, C)$ space, which is D65 illuminant by design, and $W_H$ is the origin of the $(H, r)$ space, moved from D65 at constant lightness by an offset defined in the vector $O$ above. The blue dotted curves represent the loci of constant hues in $(\theta, C)$ space, which the $(H, r)$ aims at making straight for better handling.

Suppose we start at a point of coordinates $(C_1, H_2)$ and we want to map this point for artistic purposes to a new colour of coordinates $(C_2, H_3)$. The point $(C_1, H_2)$ is first expressed in $(\theta, C)$ space by the coordinates $(\theta_1, C_1)$. Since $\theta_1$ cannot be trusted as a correlate of hue, we have to project it to $(H, r)$ space and record its hue $H_2$.

We move to $C_2$ following the radial direction $\theta_1$ in $(\theta, C)$ space. The new coordinate is $(\theta_1, C_2)$ but it has a wrong hue $H_1$ because the $(\theta, C)$ space is not hue-linear. So we need to project $(\theta_1, C_2)$ to the $(H, r)$ space and record its coordinate $(H_1, C_2)$. In there, we substitute the wrong hue $H_1$ with the original hue $H_2$ to get the point $(H_2, C_2)$, which has the new expected chroma at the same hue as the original.

Now comes the hue mapping. From the point $(C_2, H_2)$ expressed in $(H, r)$ space as the coordinates $(H_2, r_2)$, we need to rotate around $W_H$ by an angle $H_3 - H_2$ following along the radius $r_2$. The radius $r_2$ is by no mean a metric of chroma, so the resulting color will have the proper destination hue $H_3$ but a wrong chroma. We could just project the point $(H_3, r_2)$ to the $(\theta, C)$ space and substitute the wrong chroma with $C_2$, but that would be inaccurate because the chroma change would make the colour travel toward $W_C$, meaning the final hue would not be $H_3$. This may be accurate enough in most cases though. To get a proper chroma mapping at constant hue, we would need to solve the equation $C_2 = r_3$ for $r_3$, and then substitute $r_2$ for $r_3$ in $(H, r)$ space.

Alternatively, the correlate of chroma $C’$ can be directly derivated from $r$ using equation $\eqref{eqn-chroma-r}$ and its inverse, then everything can be manipulated from $C’$ and $H$, then converted to $(H, r)$, then to $(\theta, C)$, then finally to Yuv through the reverse chroma → colorfulness.

Fortunately, there are few use cases in actual image processing that require to rotate hues, and most of them are currently done in HSL or Yuv spaces with no complains from users, so the inaccurate way is more likely enough.

#Expressing synthetic colours from perceptual hue and chroma

To create uniform GUI gradients, colour charts and sweeps, we create synthetic colour in perceptual spaces from scratch and project them to display RGB. If we want to define a colour by specifying both its hue and chroma perceptual correlates, we need to use both $(\theta, C)$ and $(H, r)$ spaces, but that will leave us with $r$ and $\theta$ unspecified. To project this colour back to a display space, we need to fill at least a third component such that we have at least one complete set of coordinates in one space. From there, the transforms between spaces are fully defined and revertible.

A synthetic colour of perceptual attributes $(H_s, C_s)$ would have, in each space, the following coordinates:

$$ \begin{bmatrix} H_s \\ C_s \end{bmatrix} \equiv \begin{bmatrix} H_s \\ r_s \end{bmatrix}_{H, r} \equiv \begin{bmatrix} \theta_s \\ C_s \end{bmatrix}_{\theta, C} $$

in which $H_s$ and $r_s$ and both dependent variables from $\theta_s$ and $C_s$. Because of the non-linear transforms containing sign and absolute value heuristics, we cannot express directly nor simply $r_s$ from $C_s$ and $\theta_s$. However, we have expressed above in $\eqref{eqn-chroma-r}$ $C$ as a function of $r$ with a good fitting, and its inverse. Using this method to generate synthetic hue charts yields a more uniform chroma, noticeably more colorful blues compared to other hues (examples below at chroma = 25%):

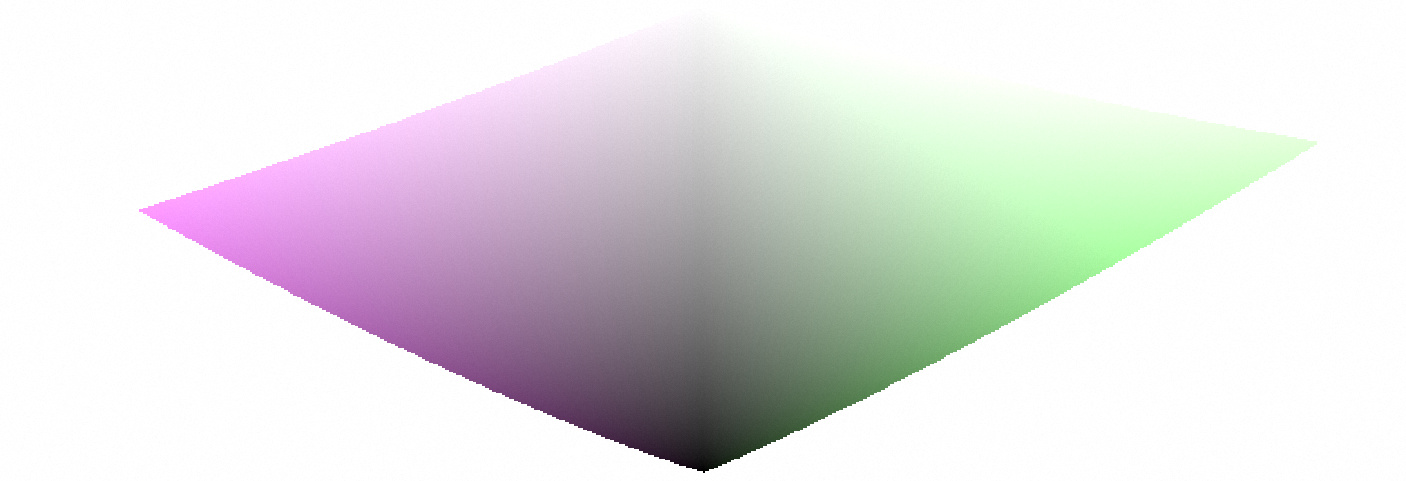

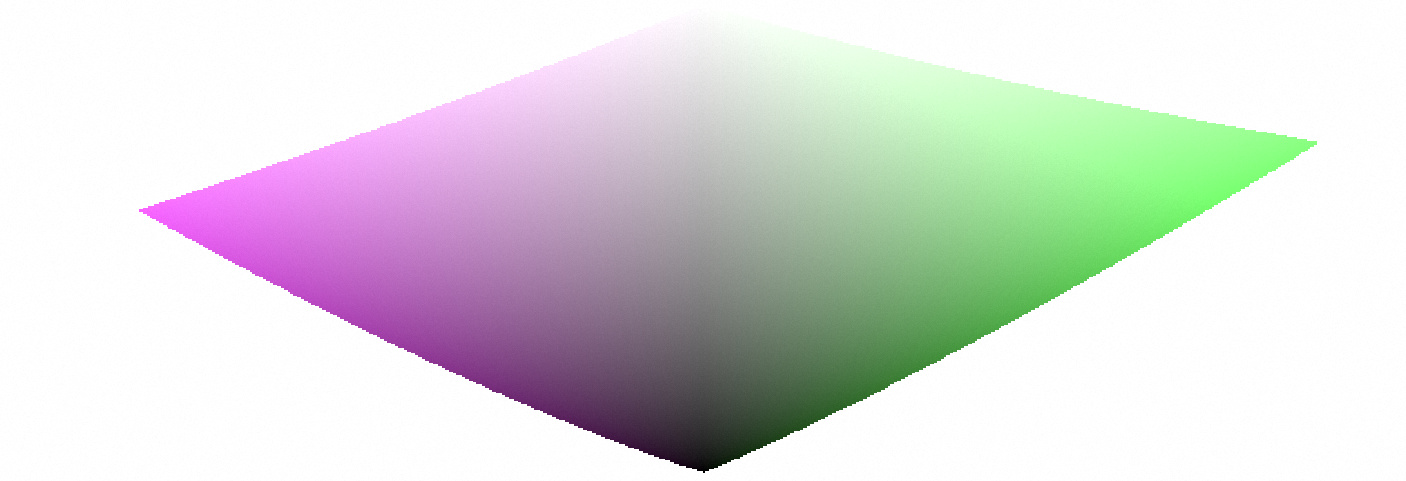

#Synthetic sweeps at improved hue constancy

Hue sweeps at increments of $L^* = 10$. Chroma has been adjusted to the maximum value that fit in sRGB gamut. Compared to the previous stage ($C$ non adjusted for hue with the excentricity equation), the chroma had to be divided by a factor of roughly 1.6 to fit within sRGB gamut, because the blue region fell outside.

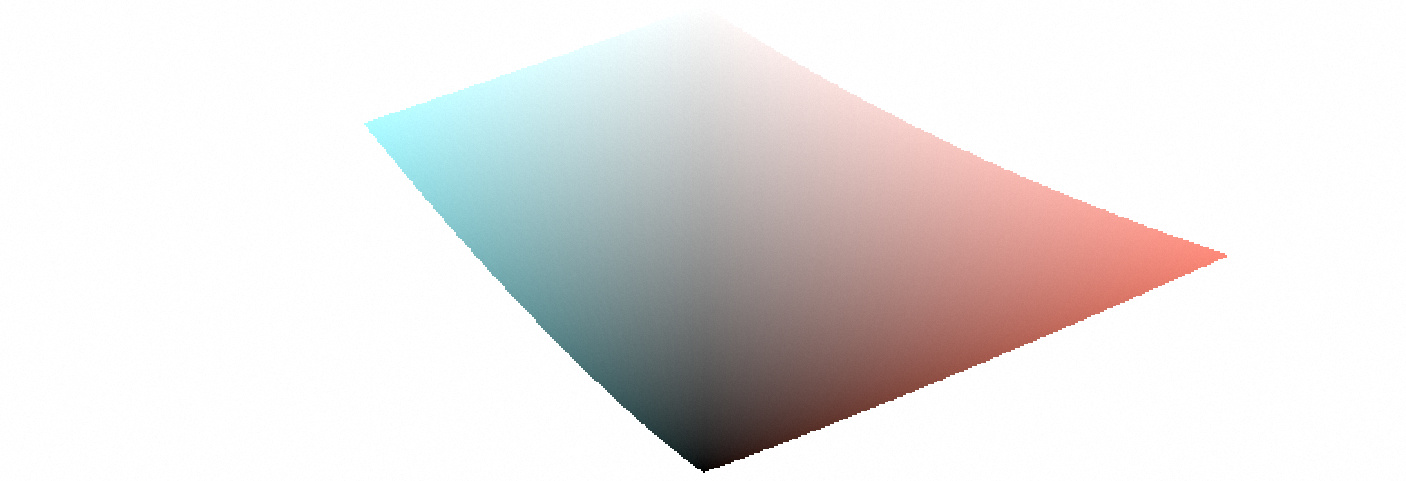

Next, we review the sRGB gamut at constant hue. The first approach is to sweep over radius $r$ and lightness, in $(H, r)$ space:

The gamut is compressed over the chroma axis, which is the trade-off we made for improved hue linearity. This works, as it shows on the blue-yellow, since the gradient from achromatic to sRGB primary blue does not take a leap toward purple anymore. As stated above, the radius $r$ is no longer a direct correlate of chroma, and this can be an issue. We can resolve that with a second approach, by sweeping over chroma as defined as $C’$ and hue as $H$, then using equation $\eqref{eqn-chroma-r}$ to go to $H, r$ space from which we convert to $\theta, C$ space, then back to colourfulness $M$ and YUV. This is shown in the next sweeps, where sRGB colours are displayed in real colours, and colours within the visible locus but outside of sRGB are represented in grey. The scale of both axes is normalized to unit, so the delta E is theoritically circular :

The conclusion from these results is that the $(H, r)$ space is worthless on its own and has merits only as a connection space to the $(H, C’)$ space. This last space has a chroma cusp at the achromatic locus which may be challenging and ill-suited for synthetic gradients that go through achromatic, in which case the chroma correlate $C$ will need to be substituted from the $(\theta, C)$ space, giving a less accurate correlation but smoother.

Though none of the $(H,r)$ and $(H, C’)$ spaces have complementary colours at opposite hue angles (set apart by 180°), the absolute deviation for sRGB primaries is only between 6° and 8° of angle.

#Brightness-Saturation model

The lightness-chroma we have built so far shows good color uniformity, that is a fairly even delta E (colour difference) as well as a good relative scaling between chroma and lightness. We could then quickly express saturation as colourfulness / brightness, but then, how to express brightness ?

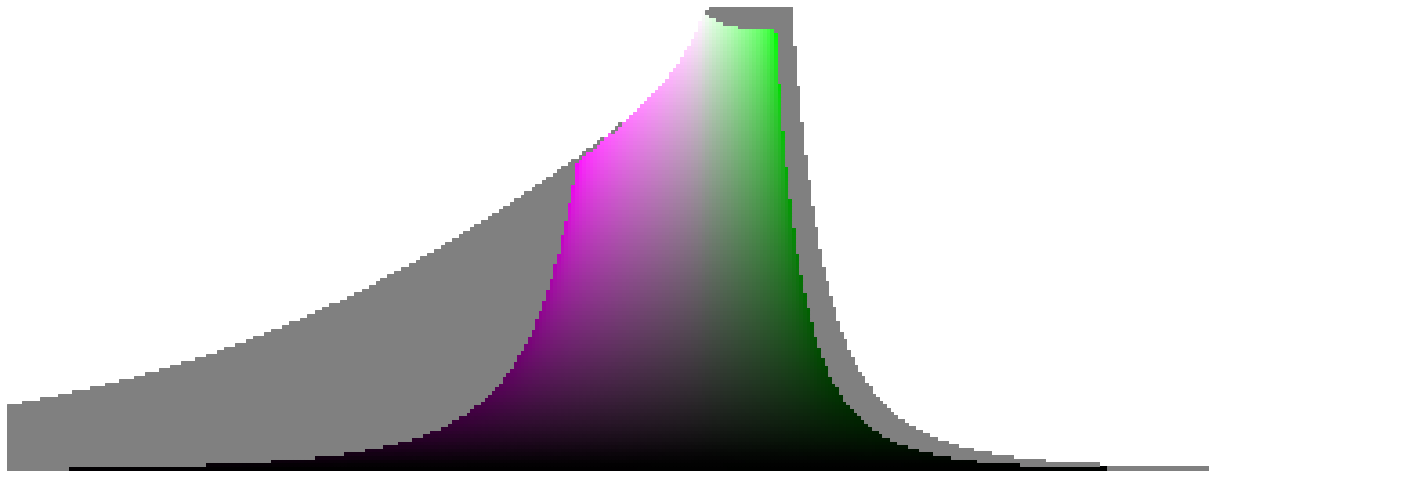

To understand the problem we are facing, let us review hue patches against a background having the same lightness (you are advised to open each image in a new tab and look at it full-screen). In each of the following sweeps, all colours in a row have the same chroma (as predicted by the darktable UCS) and the same lightness, which is also the lightness of the neutral background :

Even though the lightnesses are all the same on the same row, they don’t feel the same : orange, purple-red and turquoise feel more luminous than the others and than the background. So, at constant chroma and lightness, their brightness is different. This is the Helmholtz-Kohlrausch effect. The main reason is chroma and lightness are evaluated against white (which actually shows in the equations) while brightness is evaluated against a grey of the same lightness. But it does not stop there :

Above, we make chroma vary between 10% and 90% at $L^* = 50%$ against a background at $L^* = 50%$. The colours that could not be represented in sRGB gamut have been removed. Here, we see that colours start feeling fluorescent above a certain chroma threshold. This effect is called “fluorence” by Evans & Swenholt11, and the threshold at which it appears is the greyness boundary. In other words, past the greyness boundary, colorful objects appear fluorescent against a background having the same lightness.

From this, we understand several things:

- chroma or colorfulness participates in brightness too, along with lightness,

- the weight of the contribution of colorfulness in brightness depends on hue,

- manipulating chromas for artistic purposes may not have the desired effect on saturation, e.g. it may make some hues cross the greyness boundary quicker than others even in a perceptually-uniform space.

So the purpose of the brightness-saturation model is to take these effects into account.

#Fitting brightness

The Pridmore17 model was first attempted because of its intrinsic elegance, its dependency on first principles and its serious advantage regarding the prediction of complementary colours under the assumption that a mixture of complementary lights at correct luminance (adjusted with MacAdams method13) yields achromatic light. The complete model used was:

$$ \begin{align} Moment(x, y) &= \dfrac{\sqrt{(x - x_n)^2 + (y - y_n)^2}}{y}\\ S_\lambda &= \sqrt{\dfrac{Moment(x_\lambda, y_\lambda)}{Moment(x_{\lambda,c}, y_{\lambda, c})}}\\ b_\lambda &= 1.5 \times S_\lambda^{0.25} \\ p_{ct} &= \left(\dfrac{0.054}{b_{max} \times S_\lambda}\right)^{0.79} \\ f &= \dfrac{0.027}{p_{ct}} \\ p &= \dfrac{C}{C_\lambda} \\ F &= \sqrt{\dfrac{p - p_{ct}}{1 - p_{ct}}} \times (1 - f) + f \\ g &= \sqrt{\dfrac{\max(S_\lambda)}{S_\lambda}} \\ F_g &= \dfrac{F + g - 1}{g} \\ b &= 1.5 \times S_\lambda^{0.27} \times p \times F_g + (1 - p) \\ B &= b \times L \\ S &= b^2 \times Moment(x,y) \end{align} $$

where:

- $B$ is the brightness,

- $S$ is the saturation,

- $(x, y)$ are the CIE 1931 xyY chromaticity coordinates of the current colour being evaluated,

- $(x_n, y_n)$ are the CIE 1931 xyY chromaticity coordinates of the achromatic reference (illuminant),

- $(x_\lambda, y_\lambda)$ are the CIE 1931 xyY chromaticity coordinates of the monochromatic wavelength associated with the current chromaticity (boundary colour of the visible locus colinear with $(x_n, y_n)$ and $(x, y)$),

- $(x_{\lambda,c}, y_{\lambda, c})$ are the CIE 1931 xyY chromaticity coordinates of the complementary colour of $(x_\lambda, y_\lambda)$ (boundary colour of the visible locus colinear with $(x_\lambda, y_\lambda)$ and $(x_n, y_n)$),

- $p$ is the purity associated with $(x, y)$ such that $p(x_n, y_n) = 0$ and $p(x_\lambda, y_\lambda) = 1$, and $p$ is perceptually scaled between the achromatic and boundary colours. Therefore, we use the chroma $C$ from the darktable UCS and normalize it by $C_\lambda$, the chroma of the associated boundary colour,

- $p_{ct}$ is the purity threshold corresponding to a Just Noticeable Difference between achromatic light and chromatic light for the current hue.

The numerical constants can be tuned to better match experimental datasets. However, this model suffers from an important limitation : it cannot be inverted. Indeed, it has a double and non-linear dependency to the distance to achromatic, through the purity $p$ (derivated from $C$) and the MacAdams moment. More importantly, this distance is expressed in different spaces, so there is no closed-form expression that could allow to manipulate the colour saturation and brightness, then bring it back to XYZ.

So I need to find something else.

It is useful to note that the Hunt model, inherited by CIE CAM97 and finally by the Hellwig-Fairchild-Stolitzka20 modification to CIE CAM16 correct the non-linear lightness of the achromatic signal $J_A$ for Helmholtz-Kohlrausch effect as follow:

$$J_{HK} = J_A + \alpha C^\beta$$.

That is, a linear combination of chroma and achromatic lightness, with an optional non-linear compression applied on chroma depending on models. The Pridmore model applies a gain over the linear (non adapted) achromatic lightness. If we accept that the non-linear cone adaptation based on Naka-Rushton/Michaelis-Menten resembles in principle a logarithm, then:

$$ \begin{align} B &= b(C) \times L \\ \Rightarrow \log(B) &= \log(b(C)) + \log(L) \\ \equiv J_{HK} &= \alpha C^\beta + J_A \end{align} $$

So the two formulations might well be the same equation seen from a logarithmic or from a linear vantage point. After trial-and-error modelling over the darktable UCS, I propose the following model:

$$B = (1 + 0.10111571 \times C’) \times J$$

where $C’$ is taken from the chroma refinement (equation $\eqref{eqn-chroma-r}$) at constant hue $H$, from the $(H, r)$ space. For neutral colours, $C’ = 0$ which yields $B = J$ as expected.

The fitting was done against 2 datasets:

- Withouck et Al. (2013)21 : 9 observers matching the luminance of the achromatic light in temporal juxtaposition with chromatic light at 51 Cd/m² in dark conditions (unrelated stimuli) – 58 samples

- Sanders & Wyszecki (1963)22: 20 observers matching the luminance of the achromatic light against chromatic lights at 20 Cd/m² in average conditions (related stimuli) – 96 samples.

The validation is done against 2 more datasets:

- Fairchild & Pirotta (1990)23: 11 observers matching reflective paper tiles of Munsell colours matched against neutral tiles at variout lightnesses in average conditions (related stimuli) — 36 samples,

- Wyszecki (1967)24: 76 observers matching ceramic tiles of Munsell colours against neutral tiles at around 30 Cd/m² in average conditions (related stimuli) – 43 samples.

Notes :

- Chromaticity coordinates for Withouck et Al. (2013) and Sanders & Wyszecki (1963) are given for the CIE 1964 10° observer, while the two other studies provide chromaticity coordinates for the CIE 1931 2° observer. An attempt to derivate an approximate matrice transform from synthetic spectra, using color matching functions, to express all data in CIE 1931 2° was made and led to worse fitting than using no correction, so it was abandoned and the datasets are used as-is.

- Studies use various synthetic and natural illuminants resembling CIE C illuminant, but with CCT varying from 6200 to 6900 K. No attempt was made to normalize illuminants to D65 since 3 over 4 studies use a mixture of artificial and natural illuminants.

- While the Withouck et Al. (2013) is the only experiment done in dark surround, adapting to Stevens effect through the CIE CAM16 correction led to worse fitting, so it was abandonned as well.

The graph above shows the fitting of the datasets for the proposed model. It is worth mentionning that the global R² coefficient is 0.77 before any fitting because the different datasets are evenly spread over the luminance axis. The reflective datasets seem irreconciliable with the emissive datasets and any attempted fitting that succeeded on one failed on the other, so the emissive (self-luminous) case was favoured because modern image processing is made on emissive display. The Wyszecki 1967 (reflective) shows a negative R², but looking closer, we see that the cluster of points is indeed aligned with the identity line only with an offset. This may point towards experimental mistakes and will not be investigated further. Finally, the Fairchild & Pirotta datasets shows good correlation only because it is spread over the brightness axis, there is no clear trend shown in the clusters of points.

Withouck et al.21 report that no significant dependency of the Helmholtz-Kohlrausch effect upon hue could be found, while all other models (Hunt, CIE CAM16, Nayatani and Hellwig modified CIE CAM1620) use an excentricity factor to correct chroma for hues. I second this observation but I would like to point that I use an excentricity factor on the chroma correlate $C’$ earlier in the model. This might only indicate that other models have a poor fitting of the chroma correlate in the first place, and the HKE only makes this error more obvious to a point where the correction is non-optional. It might be worth considering a fix earlier on the chroma correlate.

Finally, fitting for brightness against $C$ instead of $C’$ (as defined in the first part of the model, lightness-chroma) yields better correlations with experimental data. Since this estimate of chroma is less accurate and not hue-linear, compared to $C’$, it might average experimental discrepancies better.

#Saturation

As per the CIE definition, saturation derivates from chroma and brightness as follow:

$$S = \dfrac{C’}{B}$$

#Synthetic brightness-saturation sweeps

Below, we make hue vary at constant lightness and chroma, then at constant brightness and saturation:

Compared to the lightness-chroma variant, the brightness-saturation variant shows marginally darker red and blue, but the difference is barely perceptible. Bear in mind, however, that this sweeps are displayed on a white background, which is a lightness setting. The next setting is indeed a brightness setting, with hue patches at constant saturation or chroma against a grey background having the same lightness or brightness (50 %):

(It is better to open each image in full-screen and to display it against a grey background).

Then, we review saturation sweeps at constant brightness :

The sRGB gamut slices at constant hues and varying saturation and brightness, containing the space primaries follow. In grey, I have represented colours lying within the visible locus but outside the sRGB gamut to get a sense of the visible locus shape on a brightness-saturation plane. The scale of both axes is normalized to unit :

These graphs show a problem. Recall that our original goal was an expression of saturation that would smoothly degrade red into white trough all shades of pink, and similarly for all other colours. What we have here is a colorimetric saturation, as per the CIE definition, that is a reweighted chroma for even perceptual brightness, and a brightness that essentially neutralizes the chroma component in the perceived lightness. But moving primary colours toward achromatic still yields grey and that is still not what we are after. We want the painter’s saturation.

However, these graphs show clearly the benefit of manipulating saturation over chroma in an artistic image-processing pipeline : the gamut represented in lightness-chroma space has a narrow, pointy end near black. As a result, increasing chroma in the lower gamut cone has an high probability of pushing colours outside of the gamut boundary. But since the saturation is the chroma divided by the brightness, the representation of the gamut in brightness-saturation space shows an asymptotic behaviour near black, and on the whole lower gamut cone for that matter. This makes the saturation more uniform (closer to a cylinder than a cone) over the brightness axis and thus the saturation control is more even too, reducing the risk of pushing valid colours out of the gamut.

#Painter’s saturation

Though the saturation-brightness model is an nice improvement over the classical chroma-lightness, it still doesn’t achieve our initial goal : degrading primaries into pastels, and finally into white. For this, we will need some rotations and distortions of the saturation-brightness space.

Let us start with $W$, that could be called “whiteness”. Using an additive light framework, whiteness will be maximal for highly emissive colours, that is white and RGB primaries (or even monochromatic lights from the boundary of the visible locus. Therefore:

$$ W = \sqrt{(1 - B)^2 + S^2} $$

So we can create a $(W, S)$ space. However, the graph will only occupy the $± \pi / 4$ angular sector around the $W$ axis, so we also need to open this angle. Using polar coordinates, we can force a rotation of the $S$ axis to create the $(W’, S’)$ space such that:

$$ \begin{align} r &= \sqrt{W^2 + S^2} = \sqrt{W’^2 + S’^2} \\ \gamma &= \arctan2(W, S) \\ \theta &= \dfrac{\gamma - \pi / 4}{\pi / 2 - \pi / 4} \cdot \pi / 2 \\ W’ & = r \cos(\theta)\\ S’ &= r \sin(\theta) \end{align} $$

The reverse model yields:

$$ \begin{align} \theta &= \arctan2(W’, S’) \\ \gamma &= \dfrac{\theta}{\pi / 2} \cdot \left(\frac{\pi}{2} - \frac{\pi}{4}\right) + \frac{\pi}{4}\\ S &= \sqrt{\dfrac{r^2}{1 + \tan(\gamma)^2}} \\ W &= \sqrt{r^2 - S^2}\\ B &= 1 \pm \sqrt{W^2 - S^2} \end{align} $$

Now, the sRGB gamut appears as follow (grey represents colours within the visible locus but outside of sRGB gamut):

On the vertical axis, all achromatic colours are near-white, so moving colours along the horizontal direction indeed degrades toward pastels or primaries. Moving colours along the vertical direction makes colours more or less emmisive.

#Flatening the model and planning for gamut mapping

#Reformulation of hypotheses and models